Learning Notes | Integration of OpenAI with Enterprise Apps | Part 1 - Intro and Architecture

Building applications integrated with AI used to be much simpler. Before ChatGPT, building AI-infused apps typically came down to two steps:

- Machine learning engineers/data scientists create models and then publish these models through a REST API endpoint.

- Application developers call the REST API endpoints by passing deterministic parameters.

Some examples are the REST APIs from Azure Cognitive Services or custom ML models. So the first thing I did when I got access to OpenAI’s REST APIs was launch Postman, try out the APIs, and then integrate them by simply calling the APIs from my chatbot. Most developers will probably follow the same pattern when they start.
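The "just call the REST API" pattern can be shown with a minimal Python sketch. It uses only the standard library to build a ChatCompletion request to Azure OpenAI but does not send it. The resource name, deployment name, and `api_version` value are placeholders and assumptions. Check the Azure OpenAI REST reference for the API versions your resource supports.

```python
import json
import urllib.request


def build_chat_request(
    resource: str,
    deployment: str,
    api_key: str,
    messages: list[dict],
    api_version: str = "2023-05-15",  # example version; use one your resource supports
) -> urllib.request.Request:
    """Build (but do not send) an Azure OpenAI chat completions request."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": messages, "temperature": 0}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


req = build_chat_request(
    resource="my-resource",          # placeholder resource name
    deployment="my-gpt-deployment",  # placeholder deployment name
    api_key="<your-key>",
    messages=[{"role": "user", "content": "How many vacation days do I have?"}],
)
```

Sending it would only take `urllib.request.urlopen(req)`. At this stage, the whole integration is a single HTTP call.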

But as I got deeper into this, I realized that integration for common enterprise use cases is not simple:

- How to integrate OpenAI with internal knowledge search (internal documents, databases, SharePoint, etc.)

- How to integrate OpenAI with other systems like SAP, ERP, CRM, HR systems, IT ticketing systems, etc.

- How to effectively keep track of chat conversation history

- How to implement prompts into code in a configurable way (and not make them look like magic strings)

- How to minimize the number of tokens used

- How to work within and around the service limits, more specifically the max requests/minute

- and more…
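For the service-limit item, a common workaround is to retry throttled calls (HTTP 429) with exponential backoff and jitter. The sketch below assumes a hypothetical `RateLimitError` raised by your own client wrapper. It is not an exception from any OpenAI SDK.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error raised by our own client wrapper on HTTP 429."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: let the caller handle it
            # The cap doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # the actual wait is a random value in [0, cap] to spread out retries.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the function testable. In production, you could also honor a `Retry-After` header if the service returns one.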

Who this is for: myself and like-minded readers (mostly developers)

I am writing this post primarily for myself. Yes, these are my personal notes to help me process information as I read through articles, watch YouTube videos, read Discord chats, and go through GitHub repositories. I am a developer at heart, so when I started getting into this area of LLMs and how others chain things into code, I knew that I had to take longer notes to organize my thoughts.

There are 4 posts on this topic:

- Intro and Architecture (this post)

- Development with Semantic Kernel

- Development with LangChain

- LangChain Beginner Coding

That said, I won’t cover areas I’ve already learned about in the past. This post is best read if you have a working knowledge of the following:

- A foundational understanding of OpenAI’s GPT, especially token limits and why they are so important.

- Building chatbots using Azure Bot Service and Power Virtual Agents

- Knowledge of concepts like Topic, Dialog, and Intent

- Experience with using the Completion and ChatCompletion APIs of OpenAI and Azure OpenAI.

- Some idea of prompt engineering, including meta-prompts, instructions, context, few-shot examples, etc.

As I’m writing this to process information, please expect this post to be incomplete. I will write new posts as I learn more. I welcome readers to raise topics for constructive discussion in the comments below.

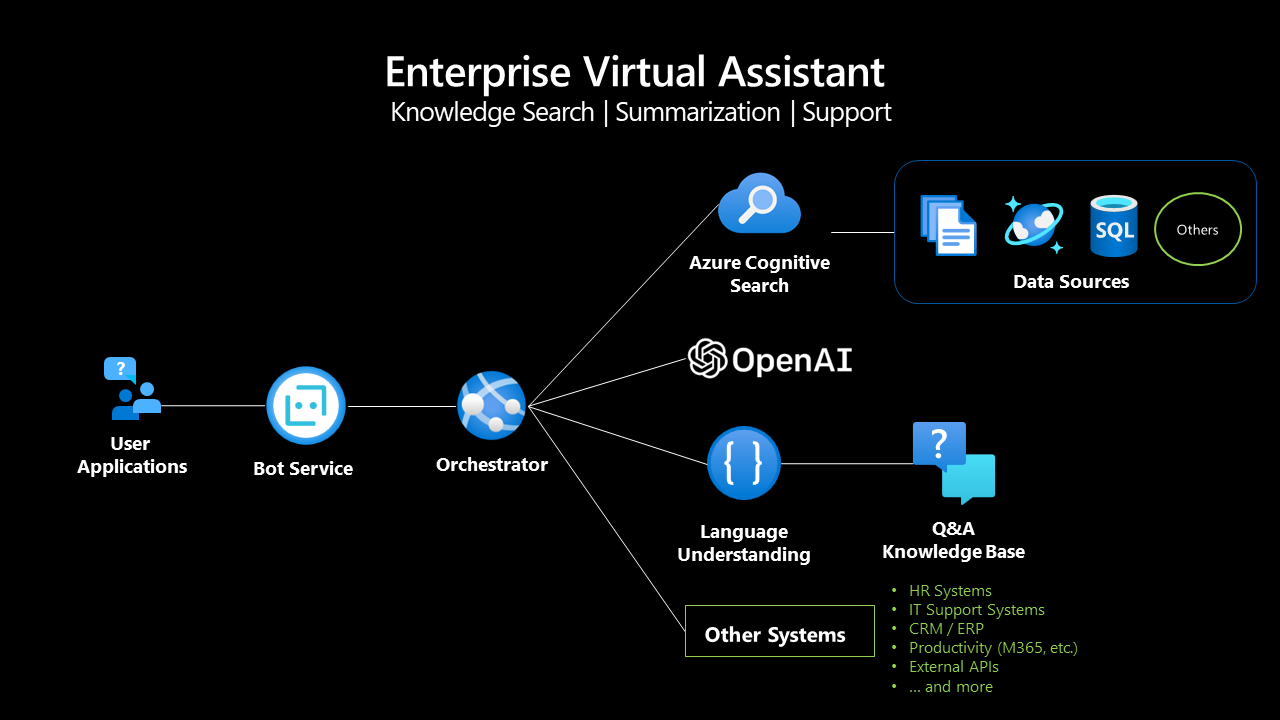

Initial High-Level Architecture and Components

One of the first questions that came to mind when ChatGPT came out was, “Can we implement a similar app for our business?” This is the primary use case I am zooming into. For example, in the case of an employee HR virtual assistant/chatbot, the high-level architecture should be something like this:

Front-End

The front-end is the primary user-facing application. For chatbots, this could be:

- a Custom Web App

- a Custom Mobile App

- a bot accessed through chat applications like MS Teams or Slack

From a development perspective, the front-end should contain minimal business rules, probably just enough to manage the Topic or Dialog conversation flow. It calls the Orchestrator and sends the response back to the user.

Back-End: The Orchestrator Pattern

The orchestrator is the central, magical, backend component. This service orchestrates the inputs and outputs from various dependencies (OpenAI, LUIS, Azure Search, Databases, etc.) and stitches everything together.

- This pattern is seen in the Copilot services that Microsoft has been releasing recently. Notice that the diagrams for GitHub Copilot, M365 Copilot, D365 Copilot, and Security Copilot all have a “Copilot Service” sitting between the application and the LLMs and other services.

- Also note that Microsoft mentions “LLM” in the diagrams, not “GPT-4”. This is because the orchestrator service can use different LLMs to achieve its objective.

Two reasons why LUIS is in the architecture

- While intent can also be detected through prompt engineering, I prefer LUIS because it can be trained, unlike GPT (where the P stands for Pre-trained).

- By understanding the intent, we can determine which systems to query. For example, a search intent will query Azure Cognitive Search and a query intent such as “How many vacation days do I have left?” will query an HR system.
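Here is a minimal sketch of intent-based routing. It assumes the intent names `PolicySearch` and `EmployeeQuery`, which are hypothetical and would be whatever you define in LUIS. Stubbed handlers stand in for Azure Cognitive Search and the HR system.

```python
from typing import Callable


# Hypothetical stub handlers standing in for real system integrations.
def search_policies(message: str) -> str:
    return f"[search results for: {message}]"


def query_hr_system(message: str) -> str:
    return "[leave balance from HR system]"


# Maps each intent name to the system that should handle it.
INTENT_HANDLERS: dict[str, Callable[[str], str]] = {
    "PolicySearch": search_policies,
    "EmployeeQuery": query_hr_system,
}


def route(intents: list[str], message: str) -> list[str]:
    """Run the handler for every recognized intent, in order."""
    results = []
    for intent in intents:
        handler = INTENT_HANDLERS.get(intent)
        if handler is None:
            continue  # unknown intent: skip it (or fall back to a default answer)
        results.append(handler(message))
    return results
```

A dictionary keeps the mapping configurable. Adding a new system means registering a new handler rather than growing an `if`/`else` chain.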

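The orchestrator should be designed to understand the intent of the message and execute a series of steps based on that intent. For example, suppose an employee asks, “Can I take a vacation for a whole month?” in Filipino. The logic should be something like the following. The final reply, also in Filipino, says that the company offers a vacation benefit and that, according to the system, the employee still has 12 vacation days available: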

```mermaid
sequenceDiagram
    actor User
    User->>Chatbot: Pwede ba ako mag bakasyon<br/>ng isang buong buwan?
    Chatbot->>Orchestrator: Send message
    Orchestrator->>OpenAI: Translate to English<br/>and Extract Keywords
    OpenAI-->>Orchestrator:

    rect lightgray
        Note over Orchestrator,OpenAI: Create Plan
        Orchestrator->>LUIS: Get Intent(s)<br />(consider chat history)
        LUIS-->>Orchestrator: Policy Search<br />and Employee Query
    end

    rect lightblue
        Note over Orchestrator,OpenAI: Search Skill
        Orchestrator->>Cog Search: Search leave policy documents
        Cog Search-->>Orchestrator:
        Orchestrator->>OpenAI: Summarize
        OpenAI-->>Orchestrator:
    end

    rect lightgreen
        Note over Orchestrator,OpenAI: Query Skill
        Orchestrator->>HR System: Get employee vacation<br/>leave balance
        HR System-->>Orchestrator:
    end

    Orchestrator->>OpenAI: Combine/Summarize Information,<br />and Generate Response (in Filipino)
    OpenAI-->>Orchestrator:
    Orchestrator->>Orchestrator: Manage Chat History
    Orchestrator->>Chatbot: Return message
    Chatbot->>User: Meron tayong benepisyo na<br/>pang bakasyon sa kumpanya.<br />Ayon sa ating sistema,<br />meron ka pang 12 na araw<br />na pang bakasyon<br/>na pwede mong gamitin.
```
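The flow in the diagram can be sketched as a plain-Python orchestrator. This is a hand-rolled illustration, not Semantic Kernel or LangChain code. Every dependency (translation, intent detection, search, summarization, the HR lookup, and response generation) is an injected callable with a hypothetical name. That way, real OpenAI, LUIS, Cognitive Search, and HR clients could be swapped in later. Chat history is kept in memory per user, which is only suitable for a sketch.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Orchestrator:
    # Each dependency is a hypothetical callable; swap in real clients later.
    translate_to_english: Callable[[str], str]
    get_intents: Callable[[str, list[str]], list[str]]
    search_policies: Callable[[str], str]
    summarize: Callable[[str], str]
    get_leave_balance: Callable[[str], str]
    generate_response: Callable[[str, list[str], str], str]
    histories: dict[str, list[str]] = field(default_factory=dict)

    def handle(self, user_id: str, message: str, language: str) -> str:
        history = self.histories.setdefault(user_id, [])
        english = self.translate_to_english(message)

        # Create plan: the intent service (LUIS in the diagram) also sees the history.
        intents = self.get_intents(english, history)

        facts: list[str] = []
        if "PolicySearch" in intents:  # search skill
            facts.append(self.summarize(self.search_policies(english)))
        if "EmployeeQuery" in intents:  # query skill
            facts.append(self.get_leave_balance(user_id))

        # Combine the facts and answer in the user's language.
        reply = self.generate_response(english, facts, language)

        # Manage chat history (in memory only, per user).
        history.extend([english, reply])
        return reply
```

In a real service, `histories` would live in an external store keyed by conversation ID. The two `if` checks would be replaced by whatever plan the planning step produces.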

This is where libraries like Semantic Kernel and LangChain come in. These libraries help developers:

- Manage the conversation history, something which the ChatCompletion API expects developers to figure out.

- Plan the approach based on the intent.

- Implement “chaining” for the approach (e.g., Chat Completion → LUIS → Cog Search → Completion).

- Manage memory and service connection requirements (e.g., conversation history, external APIs, etc.).
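Because the ChatCompletion API is stateless, the caller has to resend the history on every request and keep it within the token limit. Below is a minimal sketch of one approach: keep the system message plus the most recent turns that fit a budget. The `estimate_tokens` heuristic (about 4 characters per token) is an assumption. A real implementation would count tokens with the model’s tokenizer, for example `tiktoken`.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic: ~4 characters per token for English text (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep system messages plus the newest messages that fit within `budget` tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: list[dict] = []
    # Walk backwards so the most recent turns are kept first.
    for m in reversed(rest):
        cost = estimate_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + kept[::-1]  # restore chronological order
```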

LangChain vs Semantic Kernel (Initial Perspective)

I am still forming a better perspective for choosing between LangChain and Semantic Kernel (SK), but one primary consideration is the developer skillset.

- LangChain is currently the “most mature” (but still fairly new) framework for building with large language models.

  - It has a large open-source community. The first commit was in October 2022.

  - It supports Python and TypeScript, with Python having more features.

  - Most online articles teach LangChain using Jupyter notebooks, which leads me to think that it is built for ML engineers who are used to working in notebooks. For one, LangChain is not referred to as an “SDK”.

  - So it is up to skilled application developers to figure out how to organize code that uses LangChain.

  - LangChain was founded by Harrison Chase, who is an ML engineer by profession.

  - The LangChain open-source community is very active in its contributions.

- Semantic Kernel (SK) is “more recent” but is built with developers in mind. The first commit was in February 2023.

  - It is primarily for C# developers. It also supports Python, but Python support is still in preview (see the feature parity document).

  - Because it is built for developers, it is described as a lightweight SDK. It helps developers organize code into Skills, Memories, and Connectors that are executed through the Planner.

  - The sample code includes many examples with an orchestrator web service.

  - SK was created by an organization (Microsoft) known for software development. Its open-source community is smaller but also quite active.

  - Because it is backed by Microsoft, it has an official support page and a LinkedIn Learning course.

  - And because SK is built with apps in mind, there is an MS Graph Connector Kit for scenarios that require integration with calendars, e-mails, OneDrive, etc.

  - At the time of this writing, the GitHub repo states that Semantic Kernel is still in “early alpha.” (LangChain, on the other hand, is purely community-driven, and there is no such mention.)

As you can imagine, there are pros and cons to choosing one or the other. The best way to form a perspective is to try it out. This is something I’ll write about at a later date.

Administration Module

The administration module (not in the diagram above) is an often overlooked component. Most enterprise organizations likely require a custom-developed administration app. This app should:

- Help administrators understand whether users are satisfied with the OpenAI-infused application.

- Show whether users’ questions are getting answered.

- Allow administrators to update the data sources to improve answers.

- Allow administrators to modify prompts, especially meta-prompts.

- Support other requirements as needed.
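The prompt-related requirements also tie back to avoiding prompts as magic strings in code. One option is to keep prompt templates in a configuration source that the admin app can edit, then render them at runtime. Below is a minimal sketch using Python’s `string.Template`. The prompt names and the JSON layout are hypothetical.

```python
import json
from string import Template

# Hypothetical prompts config; in practice this would be loaded from
# a file or database that the admin app can edit.
PROMPTS_JSON = """
{
  "meta_prompt": "You are an HR assistant for $company. Answer only from the provided context.",
  "summarize": "Summarize the following for an employee:\\n$context"
}
"""


def load_prompts(raw: str) -> dict[str, Template]:
    """Parse the JSON config into named templates."""
    return {name: Template(text) for name, text in json.loads(raw).items()}


def render(prompts: dict[str, Template], name: str, **values: str) -> str:
    """Fill a named template; raises KeyError if a placeholder has no value."""
    return prompts[name].substitute(**values)


prompts = load_prompts(PROMPTS_JSON)
```

`substitute` raises `KeyError` when a placeholder is missing. That surfaces a broken template edit early, instead of sending a half-filled prompt to the model.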

For pilot implementations, administrators could consider working with what’s already available in the various components. Another reason I like LUIS and Question Answering is that they have excellent built-in administration modules.

How about reviewing users’ conversation history? We should respect user privacy, so recording chat history is NOT RECOMMENDED.

For example, a conversation where an employee asks about their mental health condition should not be saved in a system that many admins can view.

Instead, implement an in-app survey/feedback mechanism to understand the chatbot’s effectiveness.
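Here is a minimal sketch of such a feedback mechanism. It assumes a hypothetical `Feedback` record that stores only the detected intent and two yes/no survey answers, with no message text. The records are aggregated per intent for an admin dashboard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    intent: str     # detected intent, e.g. "PolicySearch"; no message text is stored
    helpful: bool   # "Was this answer helpful?"
    answered: bool  # "Did this answer your question?"


def summarize_feedback(items: list[Feedback]) -> dict[str, dict[str, float]]:
    """Aggregate survey answers per intent into counts and rates."""
    totals: dict[str, list[int]] = {}
    for f in items:
        t = totals.setdefault(f.intent, [0, 0, 0])  # [count, helpful, answered]
        t[0] += 1
        t[1] += int(f.helpful)
        t[2] += int(f.answered)
    return {
        intent: {
            "count": count,
            "helpful_rate": helpful / count,
            "answered_rate": answered / count,
        }
        for intent, (count, helpful, answered) in totals.items()
    }
```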

Next Steps

That’s it for now. My next step is to try LangChain and Semantic Kernel (SK) hands-on. I will post more as I learn more, so stay tuned.