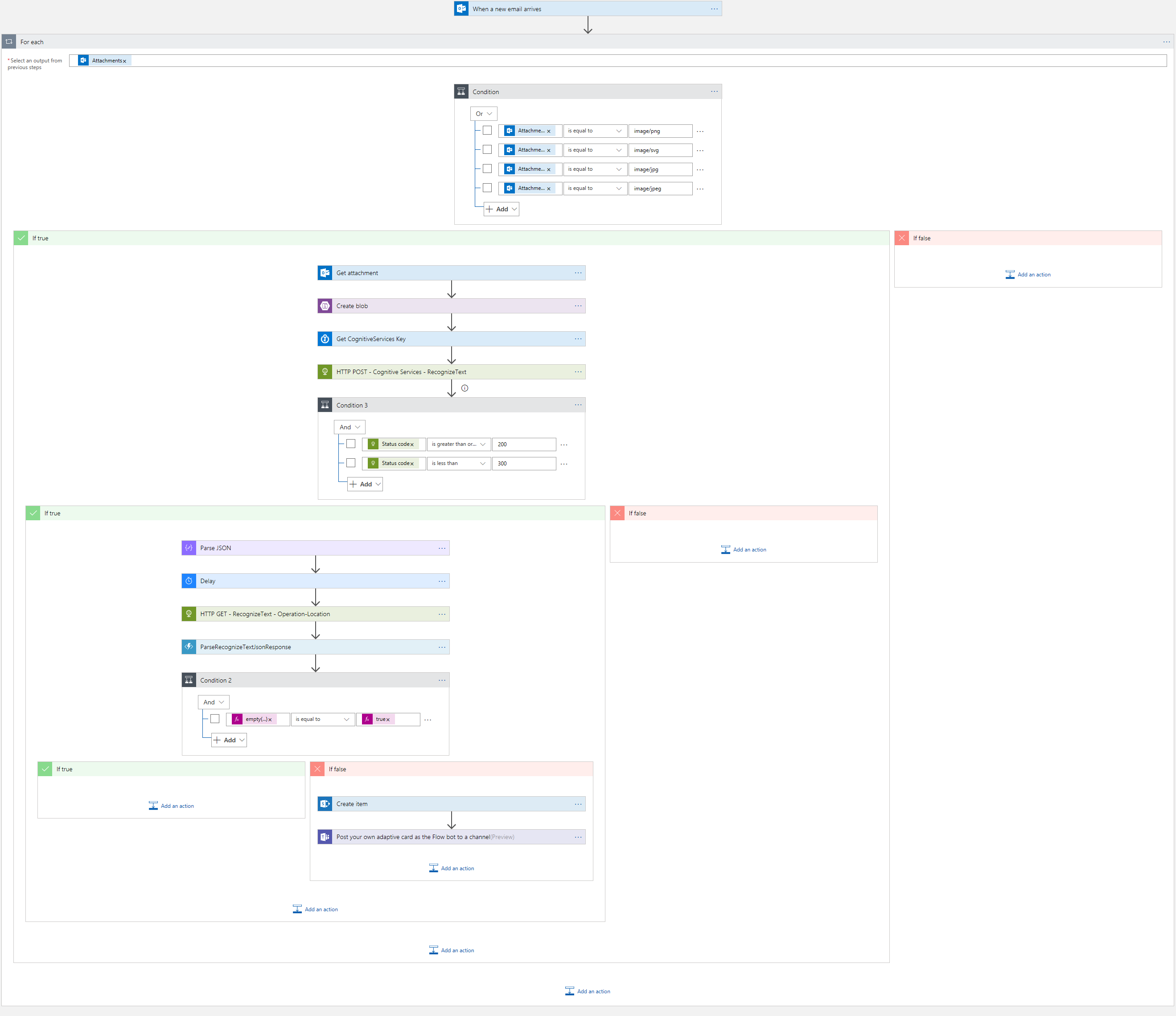

Handwriting Recognition using Logic Apps, Cognitive Services, E-mail, SharePoint and Teams

We have a lot of Surface Hubs in our new office, which we often use as digital whiteboards. After a meeting, we send the whiteboard to our mailboxes, where it arrives as an image attachment. The problem is that text inside an image attachment isn’t searchable. So we thought, hey… isn’t this really simple to solve using Cognitive Services and Logic Apps? Yes, it really is. We could:

- Use Azure Logic Apps (or Microsoft Flow) to watch an inbox for e-mails with image attachments

- Send the image to Microsoft Cognitive Services – Computer Vision

- Extract the text and save it in a SharePoint list, so that SharePoint’s built-in search can find all our whiteboards

- Send a notification to a Teams channel

Actual Implementation

Most of the actions in this diagram are self-explanatory, so I’ll only expand on a few of them.

E-mail Trigger

The trigger fires when an e-mail arrives in our inbox with a subject containing the word “ocr”. We kept it short so that it’s easy to type on the Surface Hub’s on-screen keyboard.

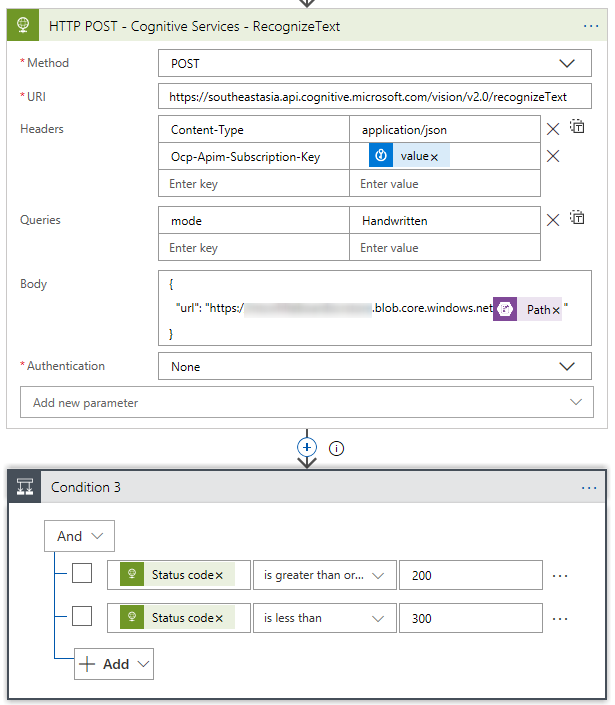

Computer Vision Cognitive Service

We used the Computer Vision Recognize Text API, which required the image file to be available at a URL the service can reach. So we first uploaded each attachment as a blob to an Azure Storage account.

Handling the Service Response

One thing that caught us out was e-mails containing images that don’t meet the service’s input requirements:

- Supported image formats: JPEG, PNG and BMP.

- Image file size must be less than 4 MB.

- Image dimensions must be at least 50 x 50 and at most 4200 x 4200 pixels.

To handle this, we created a condition that checks the status code of the HTTP POST request.

In addition, we configured the condition to run even if the previous action fails.

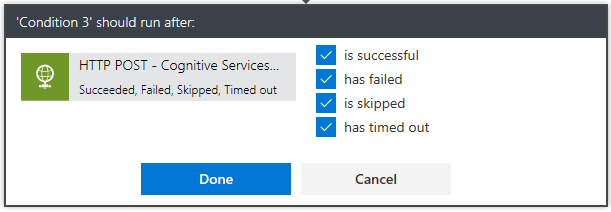
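The Logic App reacts to a bad status code after the call has been made. As a complementary idea, the limits listed above could also be checked before calling the service. The following is only a sketch of that idea, not part of our flow: the class and method names are made up for illustration, "4 MB" is assumed to mean 4 x 1024 x 1024 bytes, and the width and height are assumed to be known already, since decoding the image is out of scope here.

```csharp
using System;
using System.IO;

public static class OcrInputCheck
{
    // Limits taken from the input requirements listed above.
    // Assumption: "4 MB" is interpreted as 4 * 1024 * 1024 bytes.
    private const long MaxBytes = 4L * 1024 * 1024;
    private const int MinSide = 50;
    private const int MaxSide = 4200;
    private static readonly string[] AllowedExtensions = { ".jpg", ".jpeg", ".png", ".bmp" };

    // Returns true when the attachment looks acceptable.
    // Otherwise returns false and explains why in 'reason'.
    public static bool IsAcceptable(
        string fileName, long sizeInBytes, int width, int height, out string reason)
    {
        string extension = Path.GetExtension(fileName ?? string.Empty).ToLowerInvariant();

        if (Array.IndexOf(AllowedExtensions, extension) < 0)
        {
            reason = "Unsupported image format: '" + extension + "'";
            return false;
        }
        if (sizeInBytes >= MaxBytes)
        {
            reason = "File size must be less than 4 MB";
            return false;
        }
        if (width < MinSide || height < MinSide || width > MaxSide || height > MaxSide)
        {
            reason = "Dimensions must be between 50 x 50 and 4200 x 4200";
            return false;
        }

        reason = string.Empty;
        return true;
    }
}
```

A check like this only guesses the format from the file extension, so the status code condition is still worth keeping as the safety net.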

Extracting the Text from the Response

We also learned that the Recognize Text API doesn’t return the extracted text right away. The first call responds with an “Operation-Location” header containing a URL, and we need to make a separate HTTP GET request to that URL to fetch the result. The result isn’t ready immediately either, so we added a 10-second delay before making that request.

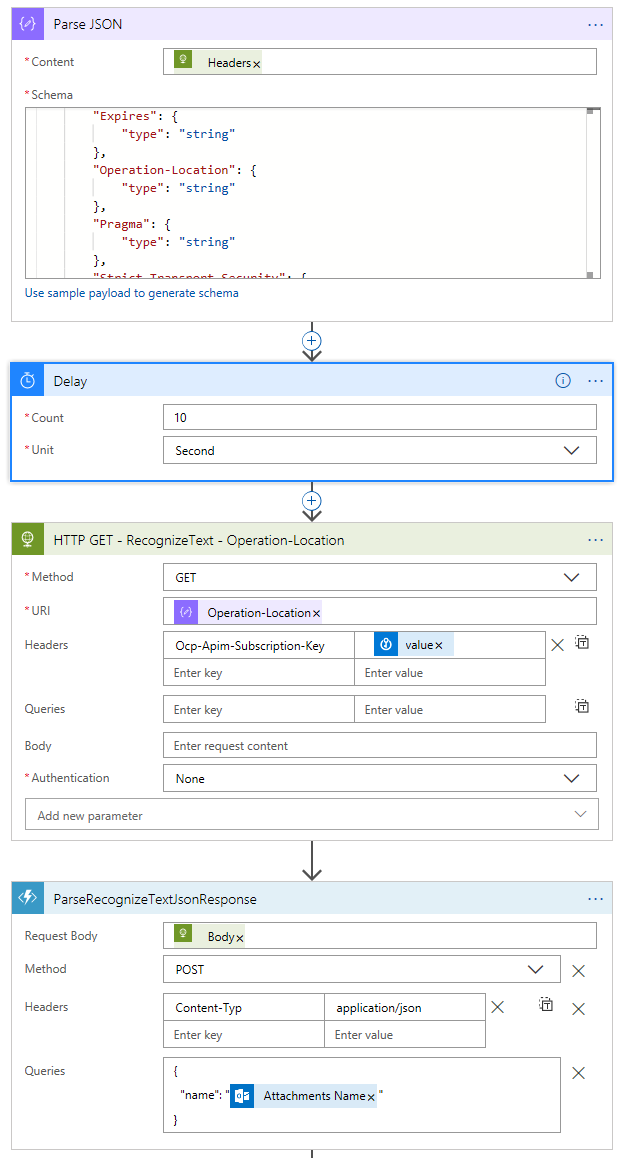
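For readers who prefer code to designer screenshots, the same submit-then-fetch pattern can be sketched in C#. This is an illustration under assumptions, not what the Logic App runs: `endpoint` and `key` stand for the Recognize Text URL and subscription key of your own Computer Vision resource, the class and method names are hypothetical, and the status values "Succeeded" and "Failed" are assumed. Where our flow waits a fixed 10 seconds and calls once, the sketch polls in a loop.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

public static class RecognizeTextSketch
{
    // Reads the "status" field of a polling response; returns "" when it is absent.
    public static string ReadStatus(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        if (doc.RootElement.ValueKind == JsonValueKind.Object
            && doc.RootElement.TryGetProperty("status", out JsonElement status)
            && status.ValueKind == JsonValueKind.String)
        {
            return status.GetString() ?? "";
        }
        return "";
    }

    // Submits an image URL, then polls the Operation-Location URL until a result is ready.
    public static async Task<string> RecognizeAsync(
        HttpClient http, string endpoint, string key, string imageUrl,
        TimeSpan pollDelay, int maxAttempts = 10)
    {
        var submit = new HttpRequestMessage(HttpMethod.Post, endpoint);
        submit.Headers.Add("Ocp-Apim-Subscription-Key", key);
        submit.Content = new StringContent(
            JsonSerializer.Serialize(new { url = imageUrl }), Encoding.UTF8, "application/json");

        HttpResponseMessage accepted = await http.SendAsync(submit);

        // Images that break the input requirements come back with a non-success status code.
        if (!accepted.IsSuccessStatusCode)
            throw new HttpRequestException("Submit failed with status " + (int)accepted.StatusCode);

        // The first response carries no text, only the URL to ask for the result.
        if (!accepted.Headers.TryGetValues("Operation-Location", out var values))
            throw new InvalidOperationException("Response had no Operation-Location header.");
        string operationUrl = values.First();

        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            await Task.Delay(pollDelay);

            var poll = new HttpRequestMessage(HttpMethod.Get, operationUrl);
            poll.Headers.Add("Ocp-Apim-Subscription-Key", key);
            HttpResponseMessage result = await http.SendAsync(poll);
            string json = await result.Content.ReadAsStringAsync();

            string status = ReadStatus(json);
            if (string.Equals(status, "Succeeded", StringComparison.OrdinalIgnoreCase))
                return json;
            if (string.Equals(status, "Failed", StringComparison.OrdinalIgnoreCase))
                throw new InvalidOperationException("Text recognition failed.");
        }

        throw new TimeoutException("Result was not ready after " + maxAttempts + " attempts.");
    }
}
```

Polling trades one fixed wait for several shorter ones, so a quick result is picked up sooner and a slow one is not missed.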

Finally, we created an Azure Function to read the response and pull out the text:

    using System.IO;
    using System.Text;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Http;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Extensions.Logging;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;

    public static class ParseRecognizeTextJsonResponse
    {
        [FunctionName("ParseRecognizeTextJsonResponse")]
        public static async Task<IActionResult> Run(
            [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,
            ILogger log)
        {
            log.LogInformation("C# HTTP trigger function processed a request.");

            // Read the Recognize Text JSON result from the request body
            string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

            JObject data;
            try
            {
                data = JObject.Parse(requestBody);
            }
            catch (JsonReaderException)
            {
                return new BadRequestObjectResult("Please pass the Recognize Text JSON result in the request body");
            }

            // Get the array of recognized lines; null if the body has a different shape
            var linesData = data.SelectToken("recognitionResult.lines") as JArray;
            if (linesData == null)
            {
                return new BadRequestObjectResult("The request body does not contain recognitionResult.lines");
            }

            // Append each recognized line to the output, one line of text per row
            var returnString = new StringBuilder();
            foreach (JToken item in linesData)
            {
                returnString.Append((string)item["text"]).Append('\n');
            }

            return new OkObjectResult(returnString.ToString());
        }
    }

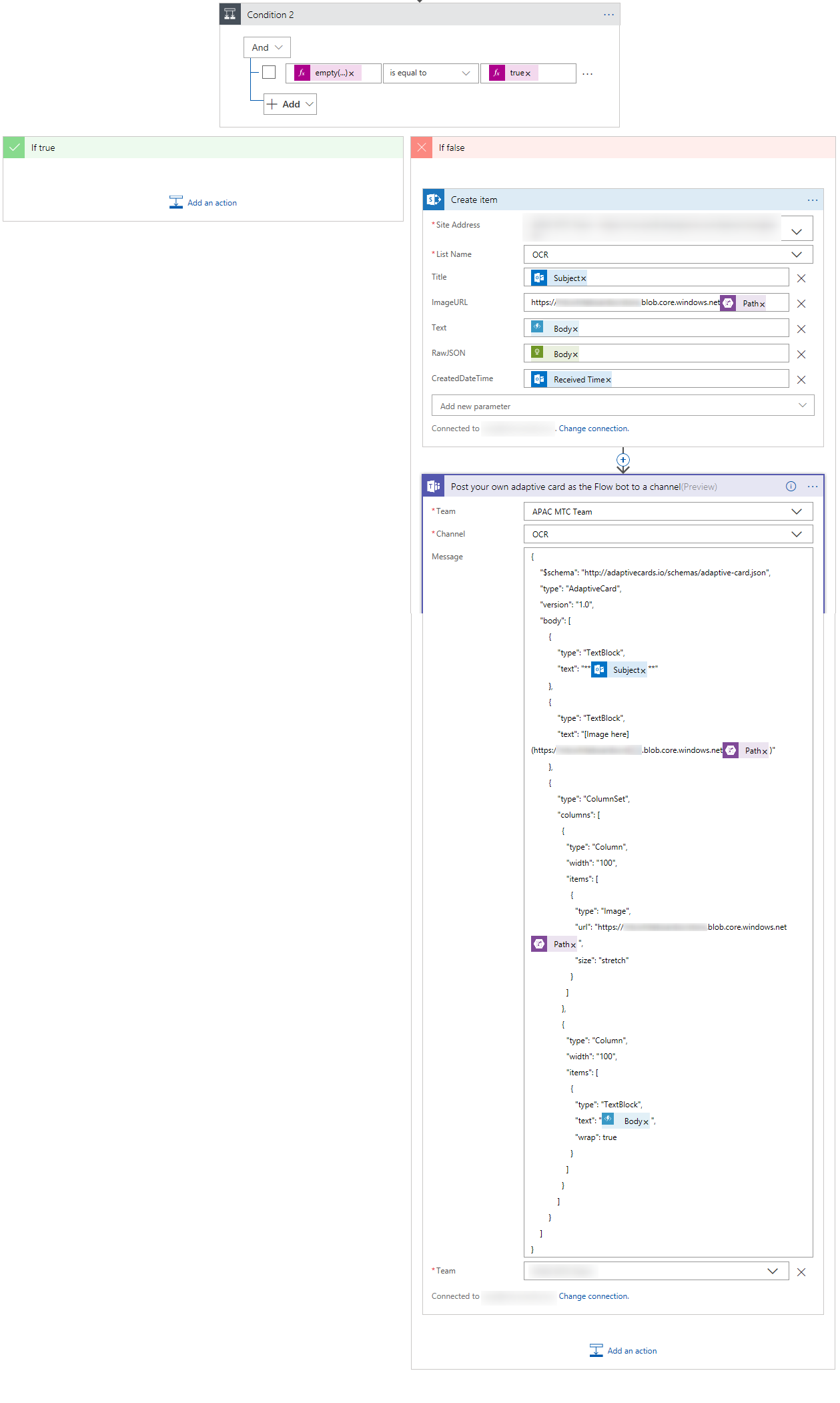
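To make the shape of the data more concrete, here is a small stand-alone sketch that can be run without Azure. The sample payload is made up and trimmed to the fields the function reads (`recognitionResult`, `lines`, `text`), and the sketch uses `System.Text.Json` instead of Json.NET purely so that it has no package dependencies. The names `RecognizeTextResult`, `SampleJson` and `ExtractText` are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class RecognizeTextResult
{
    // Illustrative payload only: a real response contains more fields per line.
    public const string SampleJson = @"{
      ""status"": ""Succeeded"",
      ""recognitionResult"": {
        ""lines"": [
          { ""text"": ""Sprint goals"" },
          { ""text"": ""1. Ship the OCR flow"" }
        ]
      }
    }";

    // Joins the "text" of every recognized line with a newline.
    // Returns an empty string when the payload has a different shape.
    public static string ExtractText(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        var lines = new List<string>();

        if (doc.RootElement.ValueKind == JsonValueKind.Object
            && doc.RootElement.TryGetProperty("recognitionResult", out JsonElement result)
            && result.ValueKind == JsonValueKind.Object
            && result.TryGetProperty("lines", out JsonElement array)
            && array.ValueKind == JsonValueKind.Array)
        {
            foreach (JsonElement line in array.EnumerateArray())
            {
                if (line.ValueKind == JsonValueKind.Object
                    && line.TryGetProperty("text", out JsonElement text)
                    && text.ValueKind == JsonValueKind.String)
                {
                    lines.Add(text.GetString() ?? "");
                }
            }
        }

        return string.Join("\n", lines);
    }
}
```

Calling `RecognizeTextResult.ExtractText(RecognizeTextResult.SampleJson)` returns the two lines separated by a newline: `Sprint goals`, then `1. Ship the OCR flow`.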

SharePoint and Teams

The rest is easy: we added connectors for our SharePoint list and our Microsoft Teams channel. If anything here is complex, it’s creating a nice custom adaptive card for Teams. This site of adaptive card samples helped a lot.

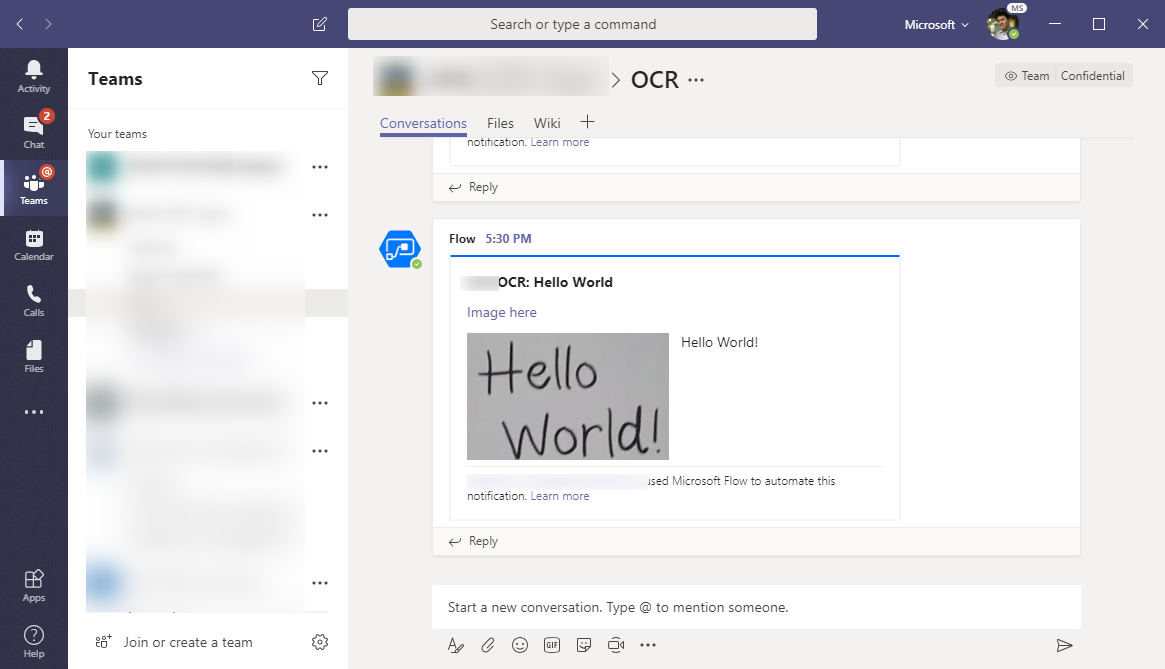
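As a rough idea of what such a card contains, the sketch below builds a minimal adaptive card as JSON. It is not the card from the screenshot: the wording, the "Open in SharePoint" button and the `WhiteboardCard.Build` helper are all made up for illustration, and only basic Adaptive Card elements (`TextBlock`, `Action.OpenUrl`) are used.

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class WhiteboardCard
{
    // Builds a minimal adaptive card showing the extracted text and a link to the list item.
    public static string Build(string extractedText, string listItemUrl)
    {
        var card = new Dictionary<string, object>
        {
            ["$schema"] = "http://adaptivecards.io/schemas/adaptive-card.json",
            ["type"] = "AdaptiveCard",
            ["version"] = "1.0",
            ["body"] = new object[]
            {
                new Dictionary<string, object>
                {
                    ["type"] = "TextBlock",
                    ["text"] = "New whiteboard captured",
                    ["weight"] = "Bolder",
                    ["size"] = "Medium"
                },
                new Dictionary<string, object>
                {
                    ["type"] = "TextBlock",
                    ["text"] = extractedText,
                    ["wrap"] = true // let long whiteboard text wrap instead of being cut off
                }
            },
            ["actions"] = new object[]
            {
                new Dictionary<string, object>
                {
                    ["type"] = "Action.OpenUrl",
                    ["title"] = "Open in SharePoint",
                    ["url"] = listItemUrl
                }
            }
        };

        return JsonSerializer.Serialize(card);
    }
}
```

In a Logic App the same JSON would typically be written straight into the Teams action, with the extracted text and the list item link filled in from earlier steps.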

Output

It works!