Learning Notes | Integration of OpenAI with Enterprise Apps | Part 3 - Development with LangChain

In my last two posts, I shared my high-level thoughts on infusing OpenAI into enterprise applications, and then explored Semantic Kernel as a lightweight SDK for developing the Orchestrator Service in C#. In this post, I am sharing my notes on using the LangChain framework with Python, including how it differs from Semantic Kernel.

What is LangChain?

LangChain is a “framework for developing applications powered by language models.” It is designed to connect a language model to other data sources and to let the model interact with its environment. Because LangChain was created before ChatGPT came out, many blogs and videos show how to use it with models other than those available from OpenAI (most of the ones I've seen use Hugging Face models).

LangChain supports both Python and TypeScript. While the Python library is more advanced, the TypeScript version is considered stable and has its own active community (see the feature parity document).

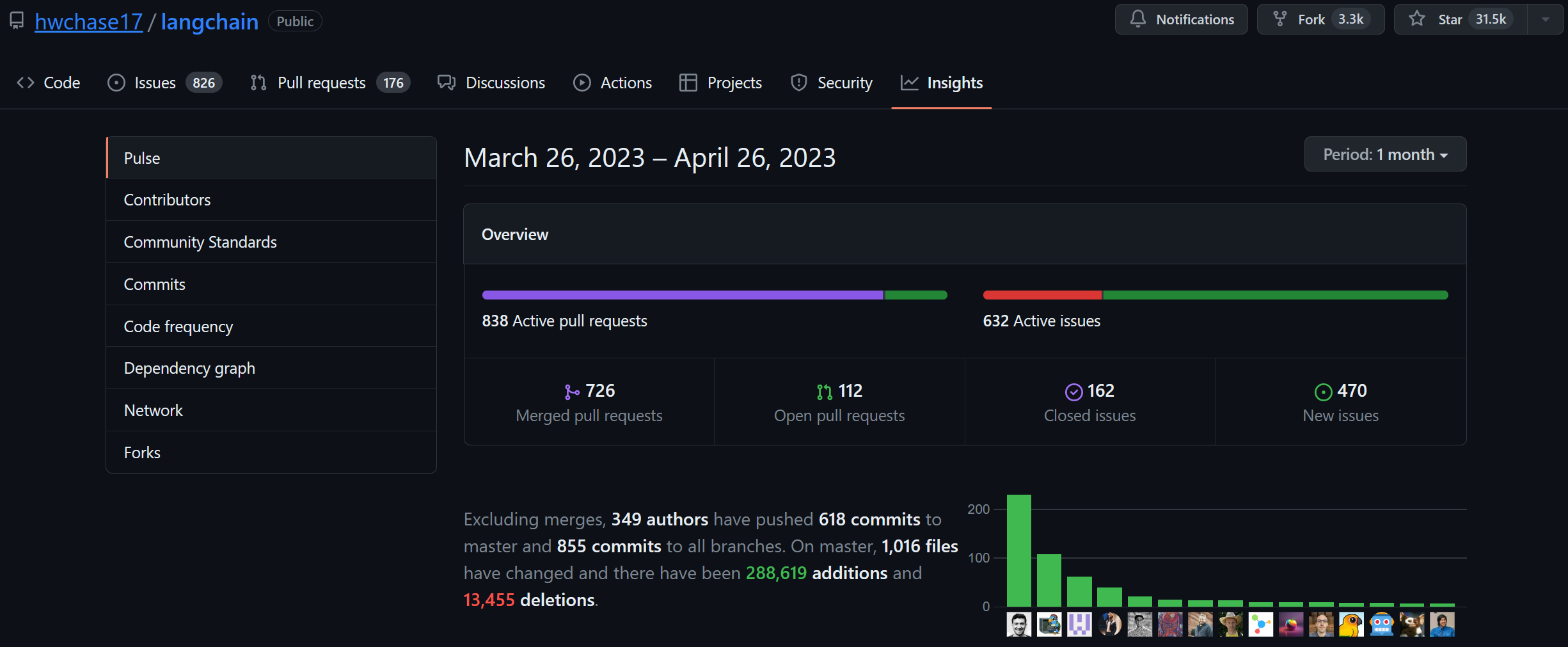

LangChain was initially created by Harrison Chase, an ML engineer at the time of this writing. LangChain is fully open source, with an active community of more than 500 contributors and thousands of forks and stars.

I think LangChain was created (and may still be aimed) primarily for machine learning (ML) engineers. I say this because most public examples, including the official quickstart guide, assume you use Jupyter notebooks. Experienced ML engineers will likely appreciate that LangChain lets you mix multiple LLMs, whereas a typical application developer would probably only be interested in one, like OpenAI's ChatGPT. If you are the latter, keeping this in mind will make LangChain easier to learn.

LangChain Framework Concepts

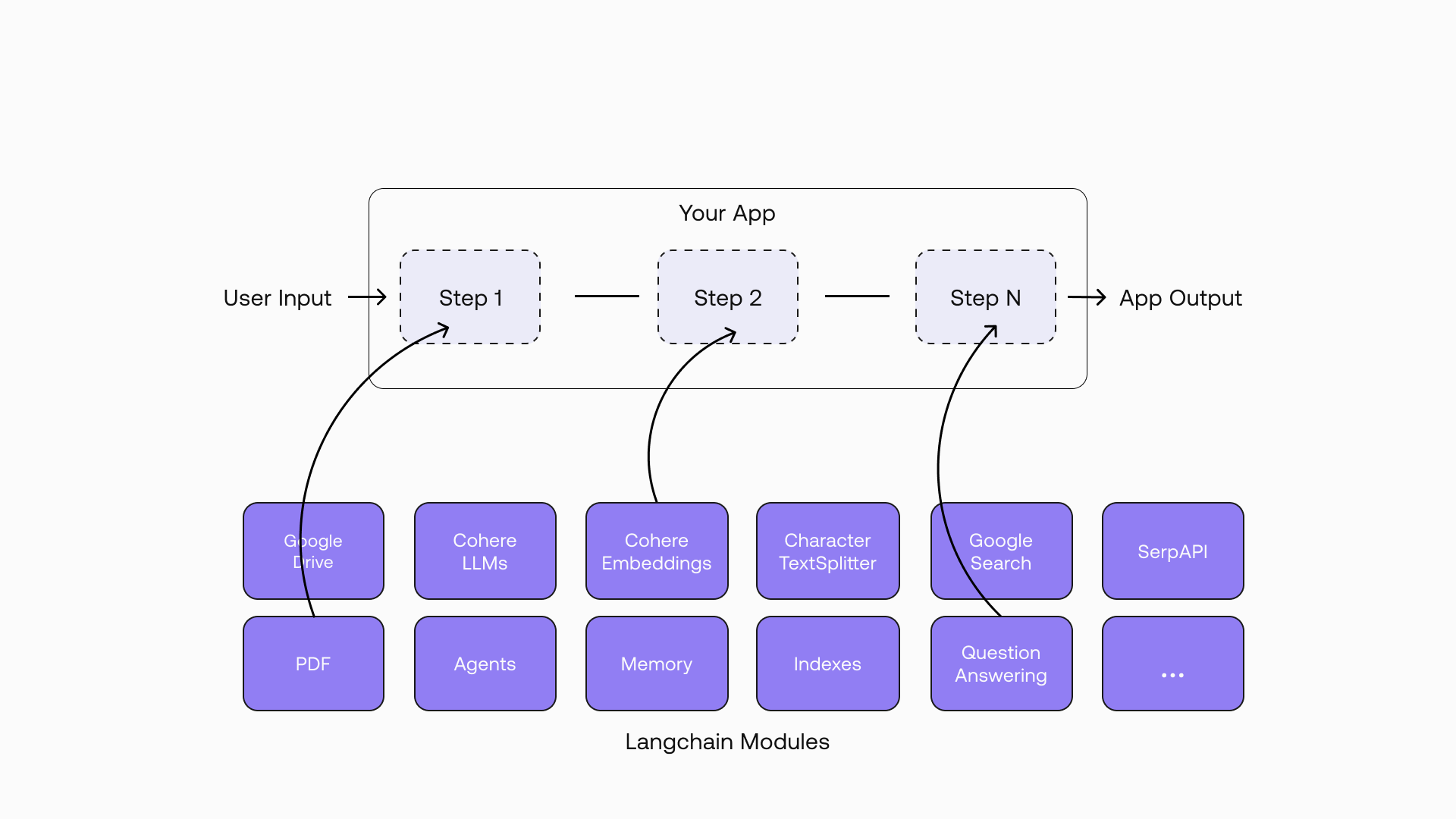

LangChain does not have a conceptual framework diagram (unlike Semantic Kernel.) A quick image web search will show different architectures from different people. The best diagram I’ve found is from Cohere:

Source: Multilingual Semantic Search with Cohere and Langchain

This diagram shows that LangChain provides an easy way to chain steps, where the output of step 1 is passed as the input to step 2, and so on. Each step can be implemented with a different LLM, hence the name “LangChain” (language chain). My guess is that LangChain started this way but has since evolved so that every step can use one or more components. The six main components of LangChain are:

- Schema: the data types (Text, ChatMessages, Examples, or Document),

- Models: the AI models (LLMs, Chat Models, or Text Embedding Models),

- Prompts: the prompt (input) to the model (PromptValue, Prompt Templates, Example Selectors, or Output Parsers),

- Indexes: the way documents are structured so that LLMs can best interact with them; think of a DB/search indexer, but for your app's data source (Document Loaders, Text Splitters, VectorStores, or Retrievers),

- Memory: storing and retrieving data over the course of a conversation (Chat Message History), and

- Chains: the generic concept of a sequence of modular components (#1-5 above), such as Chain, LLMChain, Index-related Chains, and Prompt Selector. It is considered a “main” component because a chain can also include other chains.
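To make the chaining idea concrete, here is a minimal, library-free sketch of "the output of step 1 becomes the input of step 2", with a different (fake) model on each step. The names `shouting_llm`, `reversing_llm`, `make_step`, and `run_chain` are hypothetical and are not LangChain's API; in LangChain these roles are played by prompt templates, models, and chains such as LLMChain and SimpleSequentialChain.

```python
from typing import Callable, List

# A "model" here is just a function from prompt text to completion text.
Model = Callable[[str], str]


def shouting_llm(prompt: str) -> str:
    # Hypothetical fake model: upper-cases the last line of the prompt.
    return prompt.splitlines()[-1].upper()


def reversing_llm(prompt: str) -> str:
    # A second hypothetical fake model: reverses the word order of the last line.
    return " ".join(reversed(prompt.splitlines()[-1].split()))


def make_step(template: str, model: Model) -> Callable[[str], str]:
    """Bind a prompt template to a model; {input} receives the previous output."""
    def step(text: str) -> str:
        return model(template.format(input=text))
    return step


def run_chain(steps: List[Callable[[str], str]], first_input: str) -> str:
    """Feed the output of each step into the next one, in order."""
    value = first_input
    for step in steps:
        value = step(value)
    return value


chain = [
    make_step("Rewrite this as a headline:\n{input}", shouting_llm),
    make_step("Rephrase this:\n{input}", reversing_llm),
]
print(run_chain(chain, "chain steps together"))  # prints: TOGETHER STEPS CHAIN
```

Swapping a fake model for a real LLM call would not change the chaining logic, which is the point: each step only needs to agree on "text in, text out".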

With these concepts in mind, it is up to the skilled developer to organize the code in a way that is easy for the rest of the team to work with. I must say, however, that all of the sample code I've seen hardcodes prompts as string constants inside Python functions.

If you know the best practice to properly organize prompts and avoid “magic strings” with LangChain, please share it in the comments section.

The LangChain Agent

The agent is what you use when you do not have a predetermined chain of calls to LLMs or other tools, but rather an unknown chain that depends on the user's input. The concept is very similar to the SK Planner: you define the tools available to an “agent” and let it decide which tools to use depending on the user input.

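```python
# From https://github.com/hwchase17/langchain/agents/initialize.py
# (signature excerpt: BaseTool, BaseLanguageModel, AgentType, BaseCallbackManager
# and AgentExecutor are LangChain classes; their imports are omitted here)
from typing import Any, Optional, Sequence


def initialize_agent(
    tools: Sequence[BaseTool],
    llm: BaseLanguageModel,
    agent: Optional[AgentType] = None,
    callback_manager: Optional[BaseCallbackManager] = None,
    agent_path: Optional[str] = None,
    agent_kwargs: Optional[dict] = None,
    **kwargs: Any,
) -> AgentExecutor:
    ...  # implementation omitted in this excerpt
```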

LangChain also includes commonly needed agents such as the ConversationalAgent. The idea is to describe the available tools in the prompt itself (prompt engineering), so that the LLM can tell the agent which action to execute next. See this prompt example from the ConversationalAgent:

````python
# From https://github.com/hwchase17/langchain/agents/conversational/base.py
# (excerpt: create_prompt is a classmethod of ConversationalAgent; body omitted.
# BaseTool and PromptTemplate are LangChain classes; PREFIX, SUFFIX and
# FORMAT_INSTRUCTIONS come from prompt.py, shown further below.)
from typing import List, Optional, Sequence


@classmethod
def create_prompt(
    cls,
    tools: Sequence[BaseTool],
    prefix: str = PREFIX,
    suffix: str = SUFFIX,
    format_instructions: str = FORMAT_INSTRUCTIONS,
    ai_prefix: str = "AI",
    human_prefix: str = "Human",
    input_variables: Optional[List[str]] = None,
) -> PromptTemplate:
    """Create prompt in the style of the zero shot agent.

    Args:
        tools: List of tools the agent will have access to, used to format the
            prompt.
        prefix: String to put before the list of tools.
        suffix: String to put after the list of tools.
        ai_prefix: String to use before AI output.
        human_prefix: String to use before human output.
        input_variables: List of input variables the final prompt will expect.

    Returns:
        A PromptTemplate with the template assembled from the pieces here.
    """
    ...  # implementation omitted in this excerpt


# From https://github.com/hwchase17/langchain/agents/conversational/prompt.py

PREFIX = """Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

TOOLS:
------

Assistant has access to the following tools:"""

FORMAT_INSTRUCTIONS = """To use a tool, please use the following format:

```
Thought: Do I need to use a tool? Yes
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

```
Thought: Do I need to use a tool? No
{ai_prefix}: [your response here]
```"""

SUFFIX = """Begin!

Previous conversation history:
{chat_history}

New input: {input}
{agent_scratchpad}"""
````
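To see how these pieces fit together, here is my reading of what `create_prompt` does, sketched as standalone Python so it runs without LangChain: each tool becomes a `> name: description` line, `{tool_names}` and `{ai_prefix}` in the format instructions are filled in, and the prefix, tool list, instructions, and suffix are joined with blank lines. The `Tool` dataclass and `build_agent_prompt` are hypothetical names and the prompt strings are shortened; treat this as an approximation and check the LangChain source for the real implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tool:
    # Hypothetical stand-in for a LangChain tool: just a name and a description.
    name: str
    description: str


# Shortened versions of the PREFIX / FORMAT_INSTRUCTIONS / SUFFIX strings above.
PREFIX = "Assistant is a large language model.\n\nAssistant has access to the following tools:"
FORMAT_INSTRUCTIONS = (
    "To use a tool, please use the following format:\n"
    "Thought: Do I need to use a tool? Yes\n"
    "Action: the action to take, should be one of [{tool_names}]\n"
    "Action Input: the input to the action\n"
    "Observation: the result of the action\n\n"
    "Otherwise, use the format:\n"
    "Thought: Do I need to use a tool? No\n"
    "{ai_prefix}: [your response here]"
)
SUFFIX = "Begin!\n\nPrevious conversation history:\n{chat_history}\n\nNew input: {input}\n{agent_scratchpad}"


def build_agent_prompt(tools: List[Tool], ai_prefix: str = "AI") -> str:
    """Assemble prefix + tool list + format instructions + suffix."""
    tool_strings = "\n".join(f"> {t.name}: {t.description}" for t in tools)
    tool_names = ", ".join(t.name for t in tools)
    instructions = FORMAT_INSTRUCTIONS.format(tool_names=tool_names, ai_prefix=ai_prefix)
    # {chat_history}, {input} and {agent_scratchpad} stay as placeholders,
    # to be filled in on every turn of the conversation.
    return "\n\n".join([PREFIX, tool_strings, instructions, SUFFIX])


tools = [
    Tool("Search", "useful for answering questions about current events"),
    Tool("Calculator", "useful for doing math"),
]
template = build_agent_prompt(tools)
print(template.format(chat_history="", input="What is 2 + 2?", agent_scratchpad=""))
```

The key design point is that the tools are "registered" with the LLM purely through text: the model only knows what a tool does from its one-line description.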

It is quite possible that the LangChain Agent inspired the SK Planner, as the concepts are very similar (Agent = Planner and Tools = Skills/Functions). Here are the differences I have observed:

- The LangChain Agent mainly requires the LLM and the list of tools it can use, while the SK Planner also asks for the list of skills/functions it should exclude from using.

- Every tool in LangChain is implemented in Python, with its prompt declared as a string constant. SK, on the other hand, distinguishes between semantic and native skills, and the clean-code beauty is that semantic skills are stored in plain text files.

- The LangChain Agent decides the next step after every step (based on its current state), while the SK Planner plans the sequence of steps at the very beginning.

- LangChain includes some pre-built agents in its library — chat, conversational, MRKL, ReAct, and self-ask-with-search agents. SK simplifies with a single Planner.

- The list of pre-built tools/skills also differs. LangChain has more pre-built tools, but SK has an advantage when working with the Microsoft Graph.

- LangChain feels more like a sophisticated library for chaining LLM inputs and outputs than a framework. This is my initial perspective until I learn more.
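The step-by-step behaviour of the LangChain Agent can be sketched as a loop. After every LLM response, the loop checks whether the model asked for a tool (the `Action:` / `Action Input:` lines from the format instructions above) or answered directly (`AI:`). If it asked for a tool, the tool runs, its result is appended to the scratchpad as an `Observation:`, and the LLM is asked again with that new state. This is a hypothetical, simplified sketch: the regex, `scripted_llm`, and the calculator tool are mine, and LangChain's real output parser and AgentExecutor handle many more edge cases. An SK-style planner, by contrast, would ask the LLM for the whole list of steps once, up front, and then execute it without consulting the model between steps.

```python
import re
from typing import Callable, Dict

# Matches the "Action: ..." / "Action Input: ..." lines from the format instructions.
ACTION_RE = re.compile(r"Action:\s*(.+)\nAction Input:\s*(.+)")


def run_agent(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    user_input: str,
    ai_prefix: str = "AI",
    max_steps: int = 5,
) -> str:
    """Ask the LLM for the next action after every observation (step-by-step)."""
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(f"New input: {user_input}\n{scratchpad}")
        if f"{ai_prefix}:" in output:
            # The model decided no (further) tool is needed: return its answer.
            return output.split(f"{ai_prefix}:", 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        tool_name, tool_input = match.group(1).strip(), match.group(2).strip()
        observation = tools[tool_name](tool_input)
        # Record what happened; the next decision is made with this in view.
        scratchpad += f"{output}\nObservation: {observation}\n"
    raise RuntimeError("No final answer within max_steps")


def scripted_llm(prompt: str) -> str:
    # Hypothetical fake LLM: requests the calculator once, then answers.
    if "Observation:" not in prompt:
        return "Thought: Do I need to use a tool? Yes\nAction: Calculator\nAction Input: 2 + 2"
    observation = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Thought: Do I need to use a tool? No\nAI: The answer is {observation}."


# Hypothetical tool: adds integers separated by "+".
tools = {"Calculator": lambda expr: str(sum(int(x) for x in expr.split("+")))}
print(run_agent(scripted_llm, tools, "What is 2 + 2?"))  # prints: The answer is 4.
```

The `max_steps` guard matters in practice: because the agent re-decides on every step, nothing else stops a model that keeps requesting tools.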

How to Get Started

The instructions on how to get started are shared here. But as mentioned above, this guide assumes you are already familiar with Jupyter notebooks.

If you are new to Jupyter Notebooks, I am too! I have been writing code for the last 30+ years and have never needed to learn how to use Jupyter Notebooks… until now. I am planning to use VS Code to run Jupyter Notebooks. In case you’d like to do the same, here’s what you need to do:

- Install Anaconda, which already includes Python. Don’t install Python directly (this has something to do with Jupyter needing a conda kernel instead of standard Python.)

- Read this guide for using Jupyter Notebooks in VS Code.

- (Optional) If you’re still having problems, YouTube is your friend 😀.

Also, see langchain.com for links to the JavaScript documentation, the GitHub repos, and the Discord community.

Next Steps

Now to get my hands dirty and go through that quickstart guide.