Using OpenAI and Power Virtual Agents for Conversational AI

OpenAI’s ChatGPT has caused a sensation. While it’s fun to use personally, many have wondered how to implement an enterprise version of ChatGPT that integrates with their organization’s data.

This article is about how I used Power Virtual Agents to rapidly develop an OpenAI chatbot. In this implementation:

- I am using an approach that does NOT require fine-tuning the model.

- I am simply injecting essential information into the prompt.
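To make the prompt-injection idea concrete, here is a minimal Python sketch. It is not the Power Automate flow itself; the function name `build_prompt`, the instruction wording, and the sample data are all assumptions made for illustration.

```python
def build_prompt(question: str, context: str = "") -> str:
    """Compose a completion prompt, optionally prefixed with injected context."""
    parts = []
    if context.strip():
        # The instruction wording is illustrative, not taken from the article.
        parts.append("Answer the question using only the context below.")
        parts.append("Context:\n" + context.strip())
    parts.append("Question: " + question.strip())
    parts.append("Answer:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Hypothetical data standing in for organization content.
    print(build_prompt("When is the office closed?",
                       "The office is closed on Fridays."))
```

The model itself is unchanged; everything it needs to know about the organization's data travels inside the prompt text.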

Disclaimer: While I’m using the term “ChatGPT” below, note that I am only using Azure OpenAI’s completion endpoint. Azure OpenAI is expected to release a ChatGPT service that is more fine-tuned to chat and can understand the context of previous messages. While this service is not yet out, the example below shows what can be done today.

Implementation

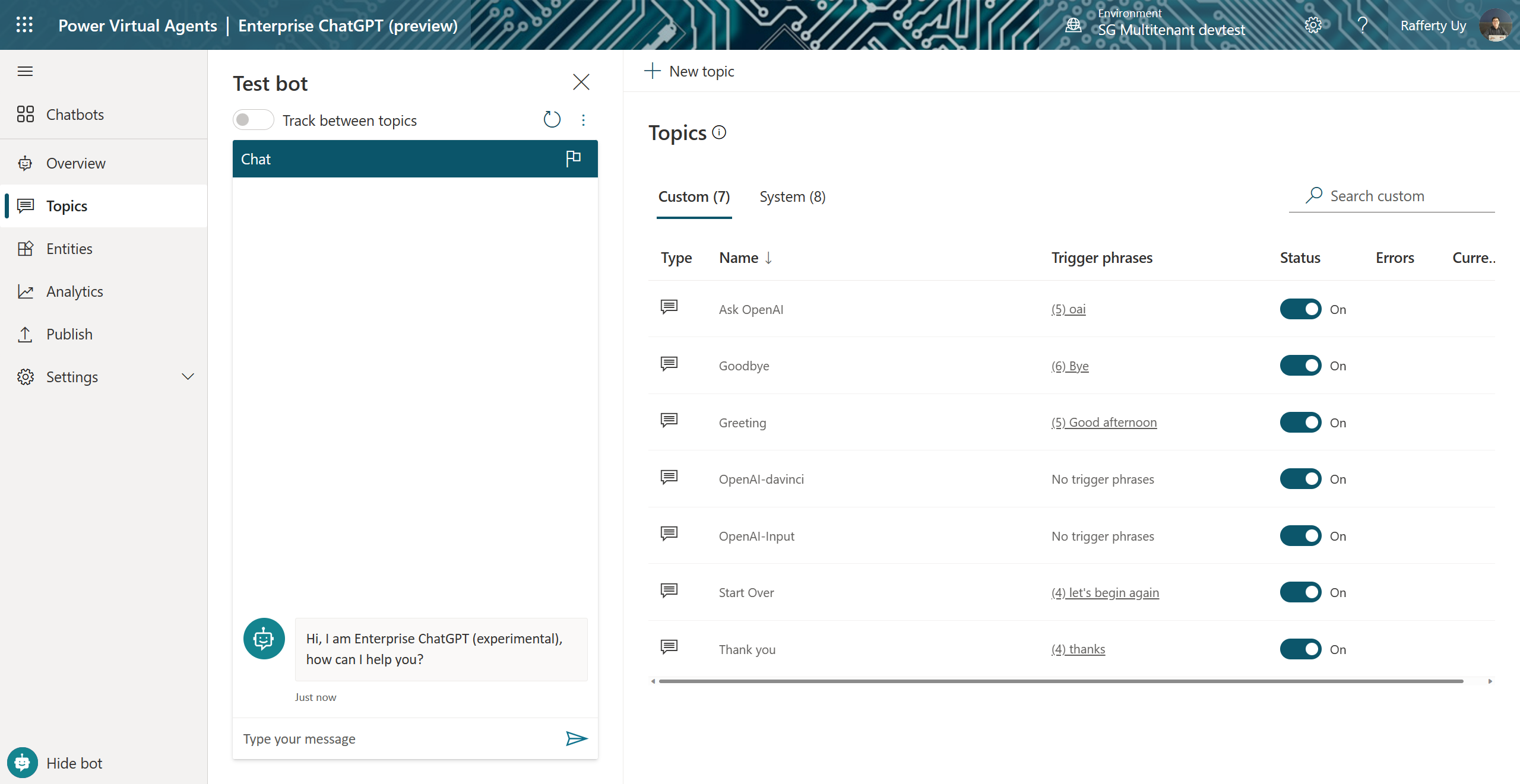

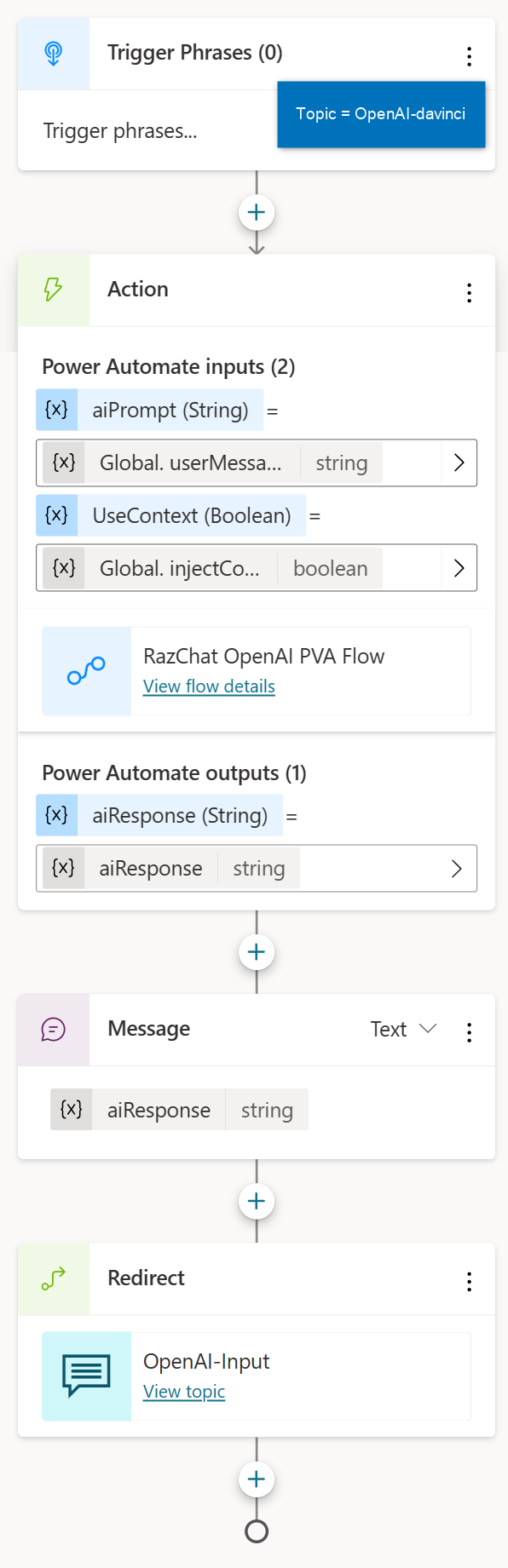

Power Virtual Agent

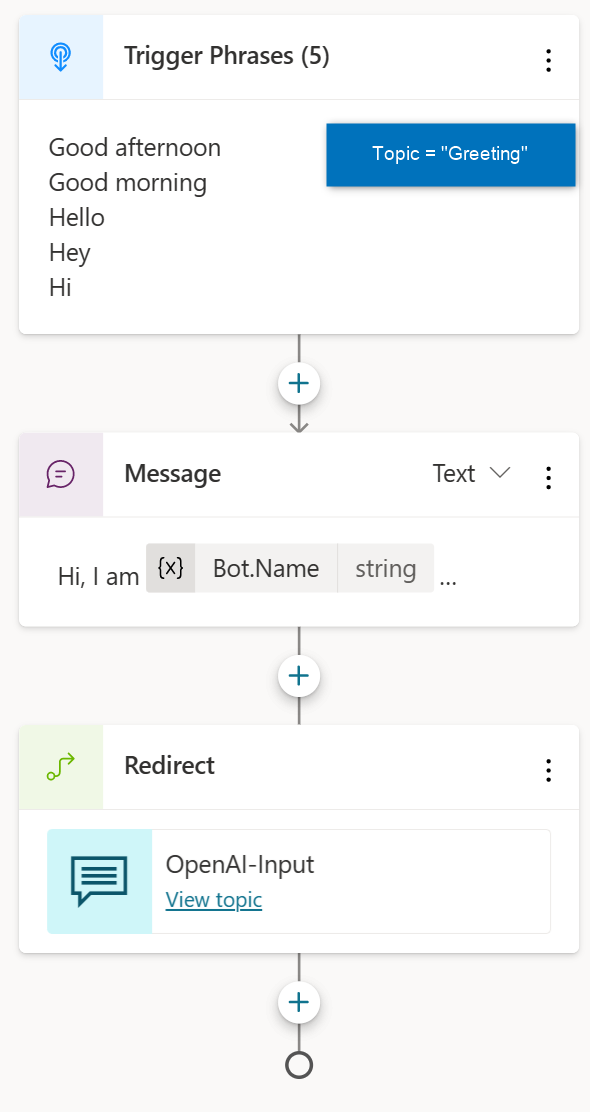

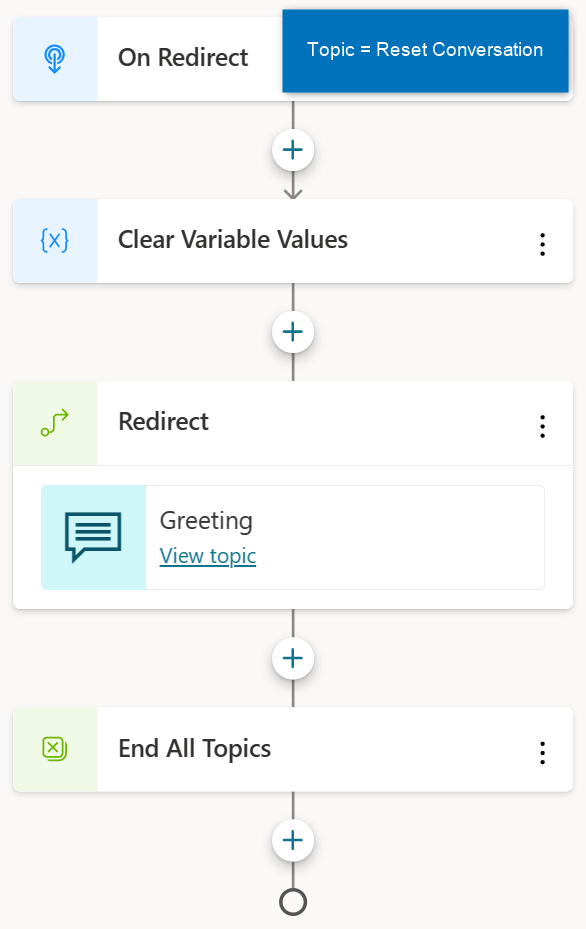

Power Virtual Agents is one of Microsoft’s low-code/no-code tools for creating chatbots (the other is Bot Framework Composer). There is a lot to learn about chatbot design, but for this post the key idea is that the bot identifies the “topic” or “intent” of a message and starts the chat dialog from there. Because we are only interested in a dialog that calls the OpenAI service, my implementation will:

- Always default to the `OpenAI-Input` topic

- Restart back into `OpenAI-Input` at the end of a chat message (hence the `Greeting` and `Reset Conversation` topics are modified to call `OpenAI-Input`)

- Use a modified `Fallback` topic whose different branches point back to `Greeting`

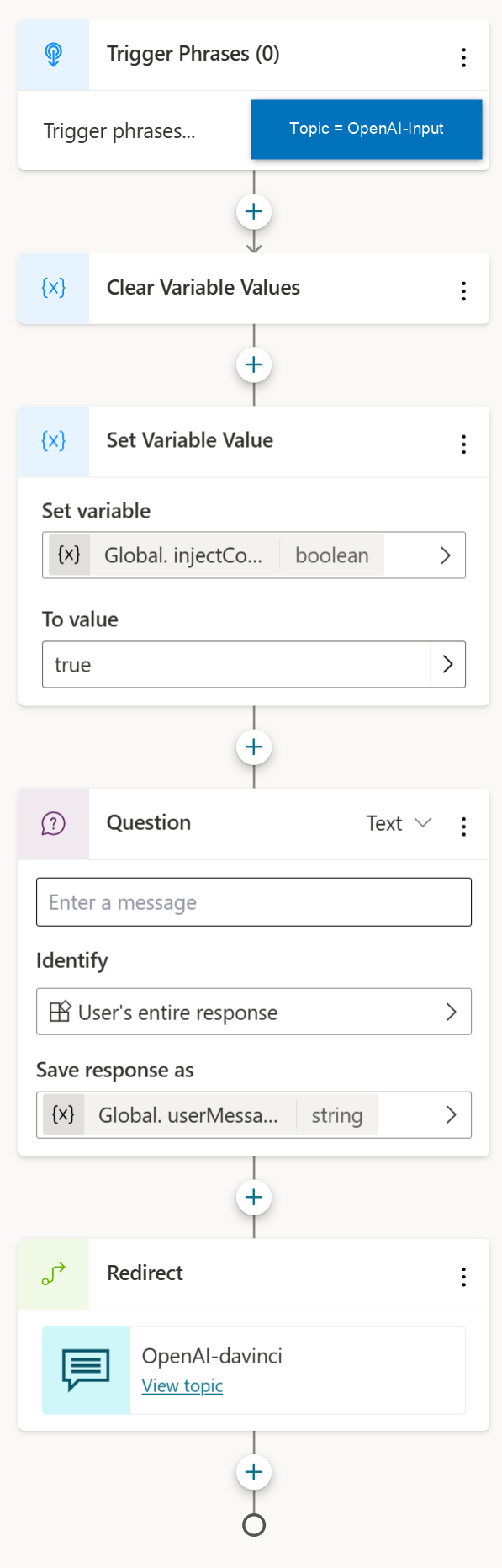

The main topics that call OpenAI are OpenAI-Input and OpenAI-davinci. There are two topics because this allows me to loop back the conversation to the same topic.

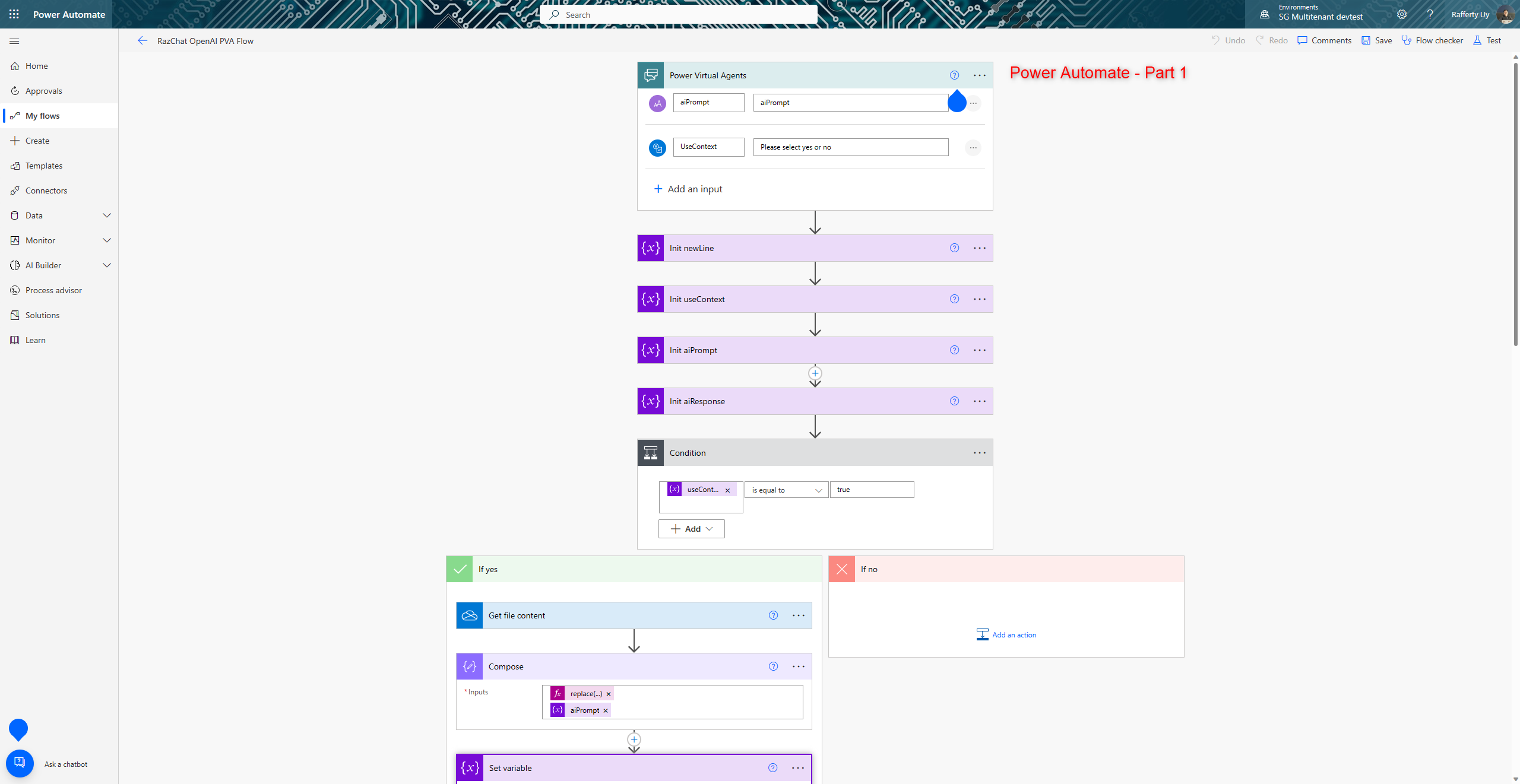

Power Automate

Power Automate is one of Microsoft’s low-code/no-code tools for enterprise integration (the other is Azure Logic Apps). This implementation:

- Uses the HTTP action to call the Azure OpenAI Completion REST API

- Injects data from a OneDrive file into the prompt. (This can be replaced with your own system integration, such as running a SQL query against your data lake and injecting the result into the prompt.)

- For reusability, I added a `UseContext` input to the flow. If it is `false`, no data is injected into the prompt.

- Because the prompt is passed as a JSON string, the injected data must be escaped for JSON characters. This is the replace expression shown in one of the screenshots.
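For readers who want to see the same steps outside Power Automate, the flow can be approximated in Python as a sketch. The URL pattern and the `api-key` header follow the Azure OpenAI completions REST API; the resource name, deployment name, API version, and the `max_tokens` and `temperature` values are placeholder assumptions, and `build_completion_request` is a hypothetical helper, not part of the original flow.

```python
import json
import urllib.request


def build_completion_request(question, context, use_context, *,
                             resource, deployment, api_key,
                             api_version="2022-12-01"):
    """Build (but do not send) a POST request to the completions endpoint."""
    # Mirror the UseContext switch: only prepend the data when asked to.
    prompt = f"{context}\n\n{question}" if use_context and context else question
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/completions?api-version={api_version}"
    )
    # json.dumps escapes quotes, backslashes, and newlines in the prompt.
    body = json.dumps({"prompt": prompt, "max_tokens": 200, "temperature": 0})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. Building the request separately from sending it keeps the prompt logic easy to inspect without a live endpoint.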

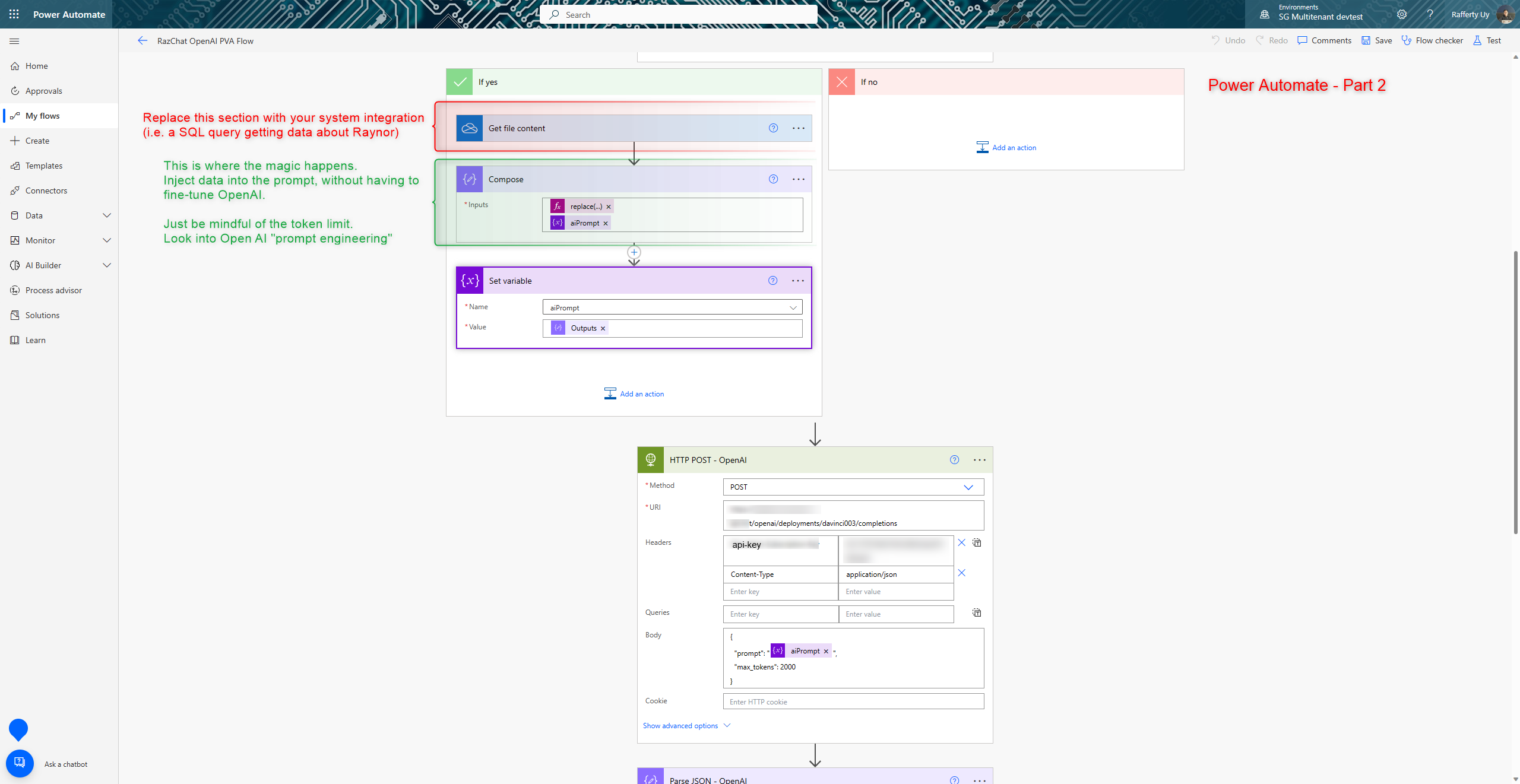

Prompt Composition and OpenAI REST API call


Because the prompt is passed as part of the JSON body, I used a Compose action to escape the JSON characters of the injected data.
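The author's exact replace expression lives in the screenshot and is not reproduced here. As a rough Python equivalent of the same idea, the sketch below escapes arbitrary text so it can be spliced between double quotes in a hand-built JSON body; `escape_for_json` is a hypothetical helper name and the sample text is made up.

```python
import json


def escape_for_json(text: str) -> str:
    """Return text escaped so it can sit between double quotes in a JSON body."""
    # json.dumps adds surrounding quotes; strip them to keep only the escaped content.
    return json.dumps(text)[1:-1]


# Sample data containing a newline, double quotes, a tab, and a backslash.
raw = 'Line one\nHe said "hi"\tC:\\temp'
body = '{"prompt": "' + escape_for_json(raw) + '"}'

# The hand-built body parses, and the original text survives the round trip.
assert json.loads(body)["prompt"] == raw
```

Without the escaping step, the first double quote or line break in the injected data would produce an invalid request body.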

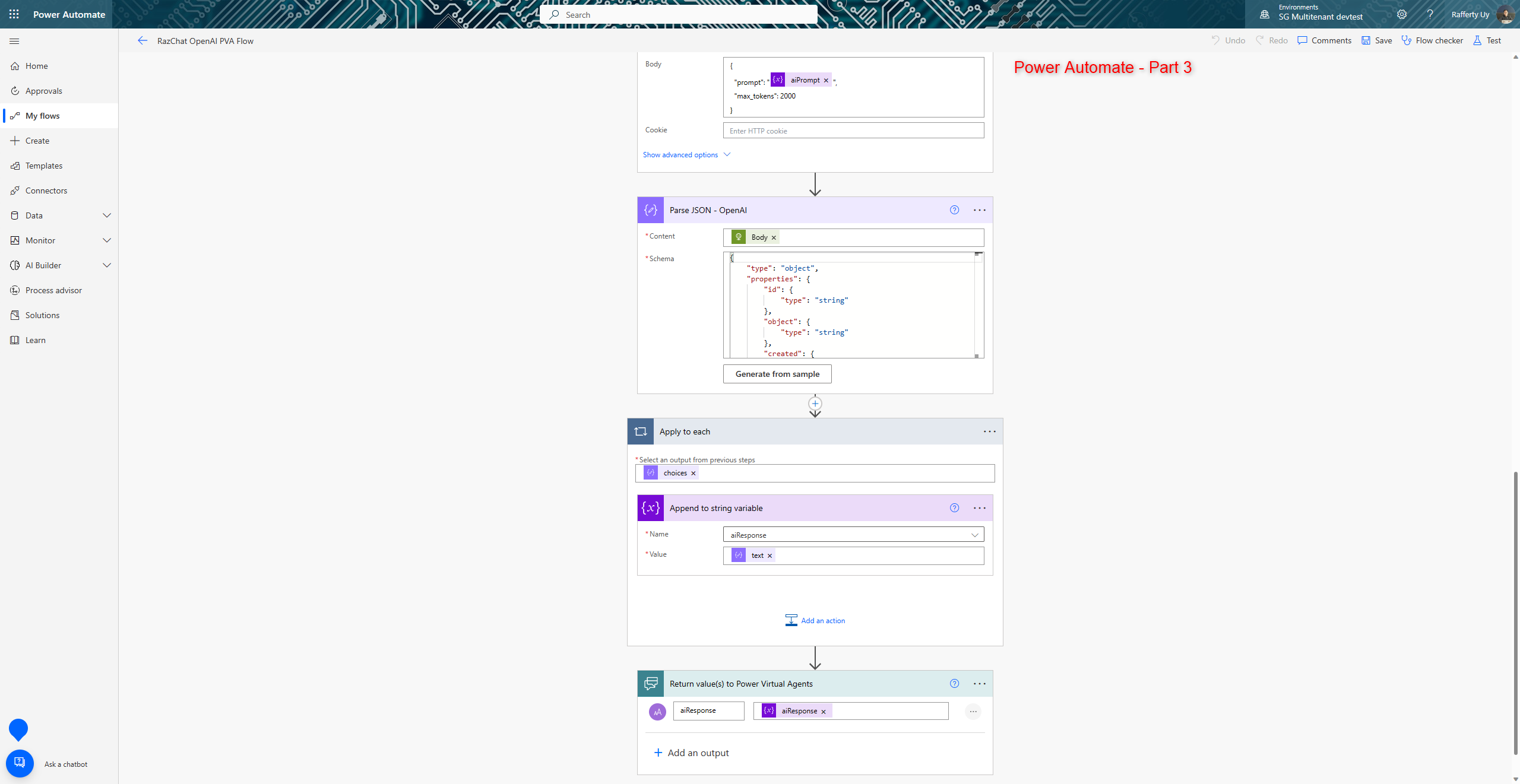

Parse response and send back to the Power Virtual Agent
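As an illustration of the parsing step, here is a small Python sketch. It assumes the standard completions response shape, in which the generated text sits at `choices[0].text`; the function name and the sample payload are hypothetical.

```python
import json


def extract_completion_text(response_body: str) -> str:
    """Pull the generated text out of a completions response body."""
    payload = json.loads(response_body)
    choices = payload.get("choices") or []
    if not choices:
        # No completion returned; let the bot decide how to handle an empty reply.
        return ""
    return (choices[0].get("text") or "").strip()


if __name__ == "__main__":
    # Made-up response used only to exercise the function.
    sample = '{"choices": [{"text": "\\n\\nThe office is closed on Fridays.", "index": 0}]}'
    print(extract_completion_text(sample))
```

Trimming the text matters because completions often begin with blank lines, which would otherwise show up in the chat reply.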


Output

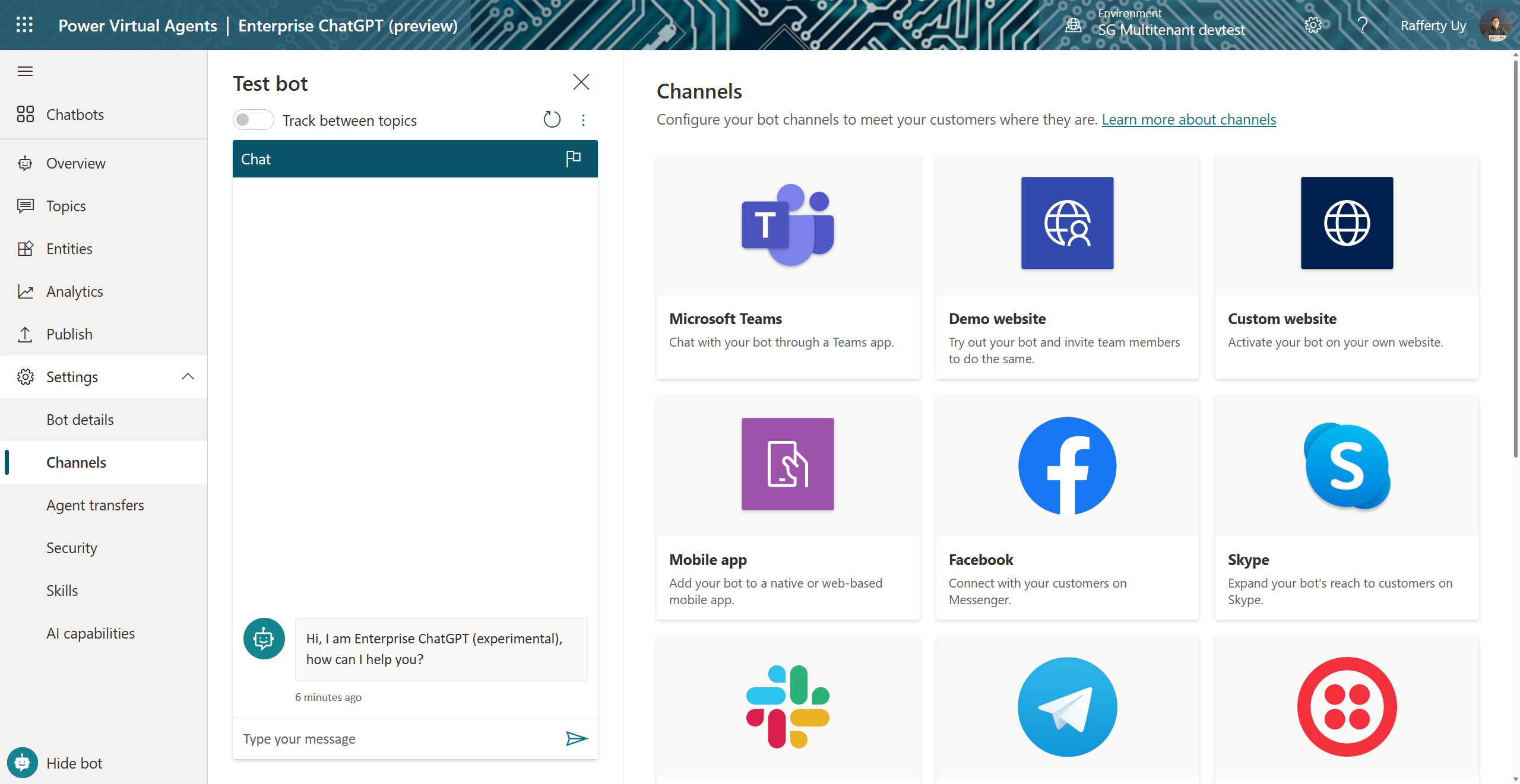

After implementation, publish the chatbot and add channels to make the bot reachable to your users (see the Power Virtual Agents documentation on channels for details).

Power Virtual Agent Channels

Here is what it looks like when deployed on Microsoft Teams and the demo website.

Output

Have fun!

Additional Challenges

- Implement additional conversation topics to the dialog and inject relevant information based on the conversation

- Abstract the OpenAI REST API with Azure API management for throttling requests and caching results

- Implement the chatbot using Bot Framework Composer deployed to Azure Bot Services

- Update the code to use the ChatGPT APIs when they are released