The 5 Ways to Address Azure OpenAI Token Limitations

It has been almost a year since organizations have tried different approaches to address the Tokens per Minute limitations of Azure OpenAI. In this post, I attempt to summarize the 5 approaches which I’ve encountered so far. These approaches are not mutually exclusive. In fact, they are often used in combination. The choice of approach(es) depend on the organization’s requirements, budget, and technical capabilities.

- Approach 1: Round-robin Load Balancing using the Azure Application Gateway

- Approach 2: Load Balancing and Failover using Azure API Management

- Approach 3: Buy Provisioned Throughput Units

- Approach 4: The Developer’s Way

- Approach 5: Through a Custom GenAI Gateway

Approaches 1-3 involve little to no development effort, whereas approaches 4-5 necessitate custom development.

This article also serves as an update to the my top 2 most-read articles of 2023: Addressing Azure OpenAI Token Limitations and Load Balancing Azure OpenAI using Application Gateway.

Every service has its limits. With Azure OpenAI, there are general limits, Tokens per Request (TPR) limits per model, and Tokens per Minute (TPM) limits per model and region availability. The table below is a summary of these limits.

| Model (version) | Tokens per Request (TPR)^ | Tokens per Minute (TPM)! | Regions Available+ | Load Balanced TPM per Az Subscription |

|---|---|---|---|---|

| gpt-35-turbo (0613) | 4,096 | 240,000 | 10 (Australia East, Canada East, East US, East US 2, France Central, Japan East, North Central US, Sweden Central, Switzerland North, UK South) | 2,400,000 |

| gpt-35-turbo (1106) | Input: 16,385 Output: 4,096 | 120,000 | 7 (Australia East, Canada East, France Central, South India, Sweden Central, UK South, West US) | 840,000 |

| gpt-35-turbo-16k (0613) | 16,384 | 240,000 | 10 (Australia East, Canada East, East US, East US 2, France Central, Japan East, North Central US, Sweden Central, Switzerland North, UK South) | 2,400,000 |

| gpt-35-turbo-16k (1106) | Input: 16,385 Output: 4,096 | 120,000 | 7 (Australia East, Canada East, France Central, South India, Sweden Central, UK South, West US) | 1,200,000 |

| gpt-35-turbo-instruct (0914) | 4,097 | 240,000 | 2 (East US, Sweden Central) | 480,000 |

| gpt-4 (0613) | 8,192 | 20,000 | 5 (Australia East, Canada East, France Central, Sweden Central, Switzerland North) | 100,000 |

| gpt-4-32k (0613) | 32,768 | 60,000 | 5 (Australia East, Canada East, France Central, Sweden Central, Switzerland North) | 300,000 |

| gpt-4 (1106-preview)* | Input: 128,000 Output: 4,096 | 80,000 | 9 (Australia East, Canada East, East US 2, France Central, Norway East, South India, Sweden Central, UK South, West US) | 720,000 |

| gpt-4 (0125-preview)* | Input: 128,000 Output: 4,096 | 80,000 | 3 (East US, North Central US, South Central US) | 240,000 |

| gpt-4 (vision-preview)* | Input: 128,000 Output: 4,096 | 30,000 | 3 (Sweden Central, West US, Japan East) | 90,000 |

| text-embedding-ada-002 (version 2) | 8,191 | 240,000 | 14 (Australia East, Canada East, East US, East US 2, France Central, Japan East, North Central US, Norway East, South Central US, Sweden Central, Switzerland North, UK South, West Europe, West US) | 3,360,000 |

Data are as of 2024-03-16 (spreadsheet here). See here for the latest limits.

^ If no input/output is indicated, the max TPR is the combined/sum of input+output

! TPM limits vary per region. For simplicity, I selected the lower value.

+ regions where model is available to all subscriptions with OpenAI access

* GPT-4 Turbo Preview, where some have TPM < TPR. Your guess is as good as mine.

This table excludes the legacy, whisper, and TTS models. See official docs for the most up-to-date limits.

Achieving the maximum TPM (last column in the table above) is what these 5 approaches are about.

Approach 1: Round-robin Load Balancing using the Azure Application Gateway

The simplest and most common way to load balance Azure OpenAI endpoints is to use a load balancer. And for Azure, that’s by using the Azure Application Gateway.

Why not Azure Traffic Manager or Azure Load Balancer?

Azure Traffic Manager does not support SSL offloading, which is necessary to connect to the Azure OpenAI endpoints. Additionally, Azure Load Balancer does not support private endpoints, which is needed to integrate with Azure OpenAI via a private virtual network.

Alternatively, Azure Front Door is a viable solution, with a configuration that is similar to Azure Application Gateway.

flowchart LR

A(Client) -.- B

subgraph AppGW[Application Gateway]

B[Frontend IP] -.- C[HTTP/HTTPS<br />listener]

C --> D((Rule))

SPACE

subgraph Rule

D <--> E[/HTTP Setting/]

end

end

subgraph Azure OpenAI

Rule --- F[East US]

Rule --- G[France Central]

Rule --- H[Japan East]

Rule --- I[etc]

end

style SPACE fill:transparent,stroke:transparent,color:transparent,height:1px;

Advantages

- Easy to set up, which primarily involves the infrastructure team.

- Developers are forced to use Azure Managed Identities for authentication, which is more secure.

- Works well with Azure AI virtual networking (also see my post on network hardening).

- The added cost is reasonable considering the benefits.

Potential Challenges

- Simple round-robin load balancing is used per request, which does not provide failover capabilities and does not consider token usage.

- All Azure OpenAI model deployment names must be identical.

- It is assumed that the model/deployment is available at all configured backend pool endpoints.

- The Application Gateway is deployed to a specific region (workaround: Azure Front Door) and introduces an additional network hop.

For a step-by-step guide, see this post.

Approach 2: Load Balancing and Failover using Azure API Management

Another approach is to use Azure API Management (APIM). With APIM policies, you can implement custom load balancing and failover rules tailored to your specific needs.

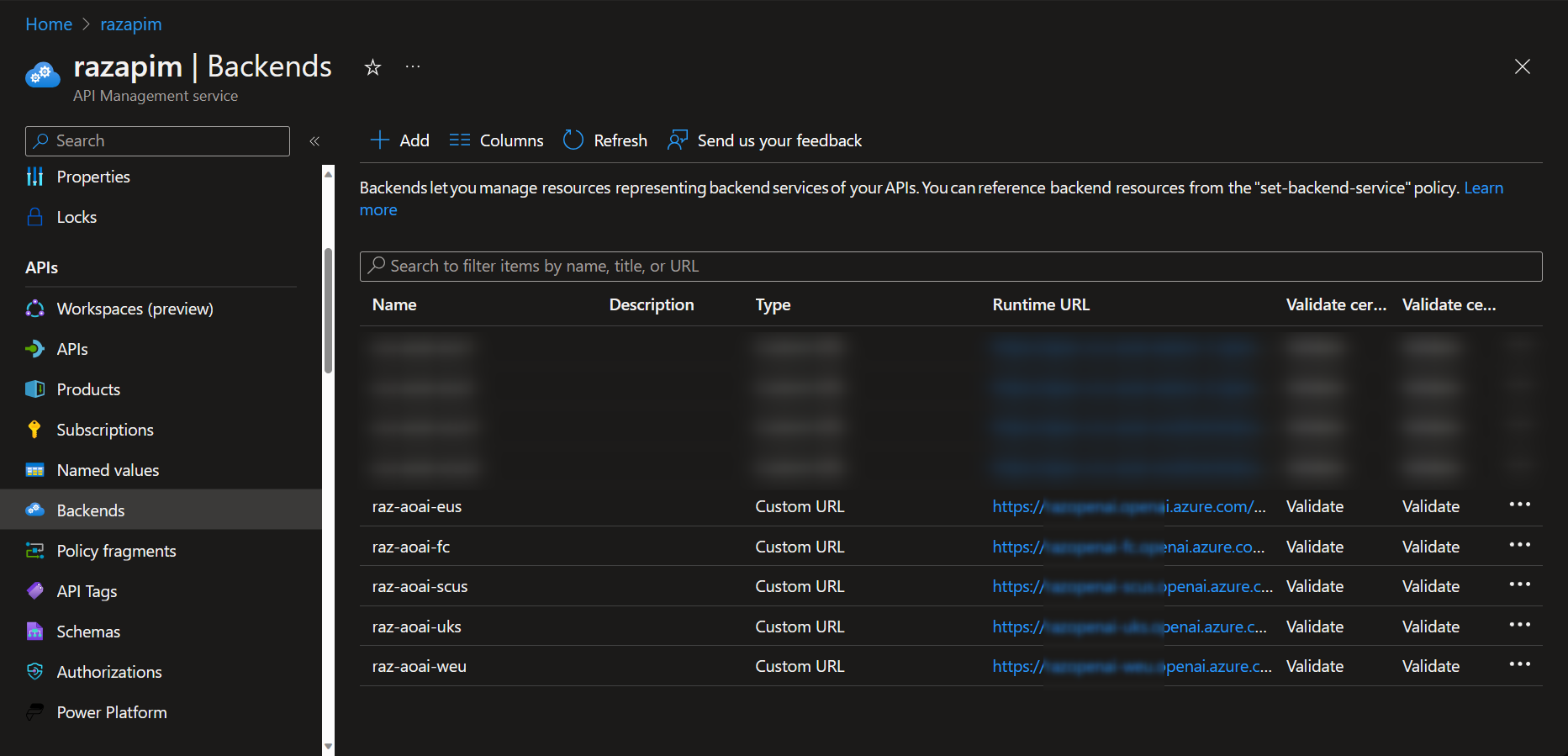

Azure API Management: Backend Configuration

Azure API Management: Backend Configuration

Using Azure API Management solely for load balancing Azure OpenAI may incur higher costs. However, APIM is highly recommended if used for its core purposes — managing and securing APIs effectively across clouds and on-premises environments.

Advantages

- Allows for custom load balancing rules tailored to specific business or technical requirements.

- Seamless retry and failover to another endpoint.

- Enables seamless retry and automatic failover to alternative endpoints. Facilitates the addition of extra logic, such as implementing cost tracking mechanisms for chargebacks.

- Provides separate API keys for each project, even when using a shared pool of OpenAI endpoints.

- Adds an extra layer of security by abstracting the backend pool from clients and developers.

Potential Challenges

- Writing custom policies can be cumbersome as it involves coding in XML+C# directly in a web browser textbox without the benefits of an IDE.

- Implementing DevOps practices with Azure APIM requires this effort.

- APIM payload limits can pose constraints on logging capabilities, particularly if there’s a need to record full prompts and responses.

- More complex policies could degrade performance, potentially necessitating higher service tiers and additional scaling.

- Similar to the Application Gateway, APIM is region-specific and may introduce an extra network hop.

For a step-by-step guide, see this post. My sample policy is also available in this GitHub repo.

Approach 3: Buy Provisioned Throughput Units

For high TPM scenarios, such as B2C applications with high concurrent usage, the most reliable approach is to purchase Provisioned Throughput Units (PTUs). This simplifies the architecture by consolidating it to a single Azure OpenAI service endpoint and increases the reliability of the application.

Advantages

- Guarantees TPM limits in line with business requirements.

- Reduces complexity by managing a single Azure OpenAI service.

- Directly utilizes the most suitable Azure OpenAI region/endpoint, minimizing extra network hops (i.e., selecting the closest region that offers the desired model/version).

Potential Challenges

Available for purchase only through your Microsoft Account Team.PTU purchases are based on a negotiated and agreed-upon time duration (similar to reserved instances).- PTUs are tied to a specific model (or possibly a model-version pair), meaning a change to a different model in the future will necessitate an additional PTU purchase.

- During Dev/Test phases, you will likely still use non-PTU endpoints to assess and decide which model to deploy in production before committing to a PTU purchase.

2024-08-12 Update: As of this date, PTUs may now be provisioned self-service.

Approach 4: The Developer’s Way

If approaches 1-3 are for a cloud engineering team to do, approaches 4-5 are for pro-developers to do. Approach 4 is a guidance to developers for calling any GenAI model through code.

Error Handling

Although using API management can help with retries and failover, it is generally good practice still handle errors by code.

The common error codes to handle are HTTP 429 and 503 (see full list here). These errors can be handled by:

- Retrying a few times up to a minute (i.e. retry every 10 seconds, up to 60 seconds)

- Sending a user-friendly error message to try again (e.g. “Sorry, something went wrong, please try again.”)

- Sending a user-friendly error message that you are restarting the conversation (to clear the conversation history and shorten your prompt)

- Also consider proactively checking the

total_tokensreturned by the completion/chat completion API. Clear the conversation history if it is near the TPR limits.

Optimizing Token Usage

Developers have the responsibility to reduce the number of tokens used per request. Beyond simply setting the max_tokens value, developers should also optimize the system prompt and limit the chat history to conserve tokens.

Windowed Conversations

How many conversations (user queries + response) do you need the application to remember? The longer the conversation memory, the higher the tokens used per request.

One of the strategies is to keep a running window of the latest k conversations. For example, in LangChain, you can use the ConversationBufferWindowMemory to do this:

1

2

3

4

5

6

7

8

9

from langchain_openai import OpenAI

from langchain.chains import ConversationChain

conversation_with_summary = ConversationChain(

llm=OpenAI(temperature=0),

# We set a low k=2, to only keep the last 2 interactions in memory

memory=ConversationBufferWindowMemory(k=2),

verbose=True

)

conversation_with_summary.predict(input="Hi, what's up?")

Summarized Conversations

Another approach is to keep a running summary of the conversation, with a specified max # of tokens.

- Pro: Maintain a summary of the entire conversation, always.

- Con: A completion request to the model is needed to summarize. While this helps keep your prompt within TPR limits, it will affect TPM limits.

An example of this is LangChain’s ConversationSummaryBuffer:

1

2

3

4

5

6

7

8

9

from langchain.chains import ConversationChain

conversation_with_summary = ConversationChain(

llm=llm,

# We set the conversation history to max 200 tokens

memory=ConversationSummaryBufferMemory(llm=OpenAI(), max_token_limit=200),

verbose=True,

)

conversation_with_summary.predict(input="Hi, what's up?")

Also note that not summarization is not helpful for all use cases. Take a food recipe application for example, a user would normally prefer the detailed step-by-step recipe than a 3-sentence summary.

Combination of Windowed Conversations and Summary

You can also combine both approaches, that is to keep a running window of the latest k conversations, while maintaining a summary of the entire conversation.

How you implement this is up to you. See here for an example from LangChain combining both methods shared above.

Make Everything Configurable

There are lots of parameters to play with here:

- The

max_tokensof the model response, - The

kmessages conversation window or themax_tokensof the conversation buffer, and - The

max_token_limitof the conversation summary

Not to mention other parameters depending on your use case.

Important Note: I only used LangChain as an example here because it provides pre-built ways to handle conversation history. Whether you use LangChain or other libraries (like OpenAI or Semantic Kernel) is entirely up to you. See these posts where I talked about my thoughts on using LangChain and Semantic Kernel.

UI Responsiveness

GenAI models do not always respond quickly. In fact, it may be best to assume that it will respond slowly. In general, the larger # of tokens (input and output) you require, the longer the response time.

Therefore, always implement user interface feedback to inform the user that their request is being processed. This feedback could be in the form of a loading spinner or a message indicating that the system is “thinking.”

When feasible, enable streaming by setting stream=True when making a Completion or ChatCompletion call (see how to stream completions). This provides visual feedback to the user that their answer is in the process of being generated.

Approach 5: Through a Custom GenAI Gateway

This is the approach that I’ve seen emerge in the last 5 months or so, and that is to further abstract common requirements into another Web API application rather than implementing these functions in the Orchestrator API itself.

Here are two open-sourced implementations that follow this approach:

- Azure OpenAI Smart Load Balancing (also see blog post)

- LiteLLM Proxy Server (thank you @ishaan-jeff for the comment here)

The GenAI gateway represents a custom Web API service that core teams develop to provide their business units with a platform for use and innovation. Some common capabilities built into this gateway include:

Also see the GenAI Gateway Playbook from Microsoft.

Smart Load Balancing

A common feedback I received since posting on this topic last year was that Azure App Gateway and Azure APIM does not intelligently load balance between OpenAI instances, and is only configured for a specific GenAI model (i.e. GPT-4). As seen here, each model has different region availabilities. And to make it more complicated, some models have different TPM limits depending on the region.

A GenAI gateway with smart-load balancing can:

- Support different backend pools: use the right set of backend pools to load balance that is specific to the model requested.

- Support weighted load-balancing: prioritize the region closest to the user or prioritize the model with purchased Provisioned Throughput Units.

- Support different GenAI models and locations: support non-Azure OpenAI models such as models from Azure AI Studio and models deployed in your GPU cluster.

- Handle common errors: for example, LiteLLM can be configured to handle fallbacks, retries, timeouts, and cooldowns.

Logging Feedback, Prompts and Responses

A GenAI gateway can also provide a standard way to log information and feedback from users for further analysis and improvement of the application. This is useful for:

- Prompt engineering enhancements: analyzing the prompts generated using methods like RAG and the responses that lead to specific user feedback.

- Audit history: providing a record of interactions, a feature often required in highly regulated industries.

Additionally, this data can support various other applications of logs and analytics, such as performance monitoring, user behavior insights, and system troubleshooting.

Important Data Privacy Warning: While Azure OpenAI adheres to specific data privacy agreements, organizations opting to store data in their own systems must be cautious. Prompts and responses handled by these systems could potentially contain sensitive information, especially in applications with high confidentiality requirements, such as an employee HR chatbot. In such cases, conversations may reveal salary details, personal issues, and various complaints. It is crucial for organizations planning to retain this data to not only secure it properly but also to ensure they transparently inform their users about the data storage practices in place.

Cost Management

In addition to its logging features, a GenAI gateway can provide the ability to track token usage, for cost management purposes — such as cross-charging between business units. This can be implemented together with approach 2 and keep track of token usage per Azure APIM Subscription Key.

PII Detection and Handling

Because the GenAI gateway is essentially a custom-developed API application, you can deploy it in a location of your choice. This is beneficial for highly regulated industries with data-in-transit and data-in-use policies require data residency of PII data.

The GenAI gateway can process the input prompts to detect PII data, from within the compliant location where it is deployed in. A common service to detect PII, is to use Azure AI Language Services which is available in many Azure regions.

If PII data is detected, the PII can be masked or redacted before sending the prompt to the GenAI model. The GenAI gateway can also unmask the PII data after receiving the response. Of course, implementing this is not as easy as it sounds. How to mask (and even unmask) requests and responses while ensuring quality is a big challenge. With some organizations that I worked with, they opted to simply reject the GenAI API request if PII is detected in the prompt and let the business unit figure it out.

Here are some sample implementations of PII handling:

- LiteLLM PII Masking

- SecureGenMask: From Semantic Kernel’s Dec 2023 hackathon

Azure OpenAI stores prompts and responses for 30-days for abuse monitoring (data-at-rest for 30 days). See here for more information, including how to be exempted.

Flexible Deployment Options

A GenAI gateway is usually lightweight, giving the flexibility to be deployed in a number of ways that suit your specific needs.

- Single- or Multi-region deployment: the GenAI gateway can be deployed to multiple regions that is closer to your end-users. It can also be configured so that it priorizes the OpenAI regions that it is closest to. The GenAI gateway architecture can be designed so that it uses a shared storage to keep track of token usage per region.

- Shared or separate server: if minimizing extra network hops is a priority, the GenAI gateway can be deployed on the same server/service/cluster as the orchestrator API.

- Centralized gateway or Decentralized utility: instead of deploying as a traditional gateway, why not create a reusable utility container image instead? Each new GenAI app can then configure and deploy this pre-built image separately. (Note: This is an actual implementation by a financial institution with >5 production chatbots to-date.)

And more capabilities…

By now, I’m sure you get the drift. Since this is custom code, you decide if a specific business or technical requirement should be implemented in the orchestrator API or the GenAI gateway. Here are some additional ideas that can be implemented at this level:

- APIs for embeddings,

- APIs for storing knowledge bases used for RAG applications,

- APIs for accessing custom or fine-tuned GenAI models (not specific to Azure OpenAI),

- APIS that can be used for function calling or ChatGPT plugins.

These are just a few examples of the many capabilities that a GenAI gateway can provide. The key takeaway is that the GenAI gateway is a custom-developed API service that can be tailored to meet the specific needs of your organization.

Conclusion: Use Multiple Approaches

In conclusion, although you might initially focus on addressing token limitations, it’s likely that your requirements will extend beyond this. The approaches discussed above can be effectively combined to meet these broader needs.

Thank you for reading this far! I tried to make this post short but with enough detail on the points, but it still turned out long… so thank you and I hope you found this useful!